Google is trying to make a splash with Gemini, a new generative AI platform that recently made its big debut. But while Gemini looks promising in some ways, it falls short in others. So what is Gemini? How can it be used? And how does it compare to the competition?

This guide, updated as new Gemini models and features are released, seeks to answer these questions.

What is Gemini?

Gemini is Google's long-promised family of next-generation generative AI models, developed by Google's AI research labs, DeepMind and Google Research. It comes in three flavors:

- Gemini Ultra: Gemini's flagship model

- Gemini Pro: a “lite” Gemini model

- Gemini Nano: a smaller “distilled” model that runs on mobile devices like the Pixel 8 Pro

All Gemini models were trained to be “natively multimodal”; in other words, able to work with and use more than just text. They were pre-trained and tuned on a variety of audio, images and videos, a large set of code bases and text in different languages.

This distinguishes Gemini from models like LaMDA, Google's large language model, which was trained only on text data. LaMDA cannot understand or generate anything other than text (e.g. essays, draft emails, etc.), but that is not the case with Gemini models. Their ability to understand images, audio, and other modalities is still limited, but it's better than nothing.

What is the difference between Bard and Gemini?

Google, proving once again that it lacks branding skills, did not make it clear from the beginning that Gemini is separate and distinct from Bard. Bard is simply an interface through which certain Gemini models can be accessed; think of it as an application or client for Gemini and other generative AI models. Gemini, on the other hand, is a family of models, not an application or an interface. There is no standalone Gemini experience, nor is there likely ever to be one. To draw a comparison with OpenAI's products, Bard corresponds to ChatGPT, OpenAI's popular conversational AI application, and Gemini corresponds to the language model that powers it, which in the case of ChatGPT is GPT-3.5 or GPT-4.

By the way, Gemini is also completely independent of Imagen-2, a text-to-image model that may or may not fit into the company's overall AI strategy. Don't worry, you're not the only one confused by this!

What Gemini can do

Because Gemini models are multimodal, they can theoretically perform a variety of tasks, from transcribing speech to captioning images and videos to generating artwork. Few of these capabilities have reached the product stage yet, but Google promises all of them, and more, at some point in the not-too-distant future.

Of course, it's a little hard to take the company at its word.

Google seriously underdelivered with the original release of Bard. And most recently it caused a stir with a video purporting to show off Gemini's capabilities that turned out to be heavily doctored and more or less aspirational. To the tech giant's credit, Gemini is available in some form today, albeit a quite limited one.

Still, assuming Google is more or less truthful with its claims, here's what the different levels of Gemini models will be able to do once they launch:

Gemini Ultra

Few people have gotten their hands on Gemini Ultra, the “base” model on which the others are built, so far: just a “select set” of customers across a handful of Google apps and services. That won't change until later this year, when Google's largest model launches more broadly. Most of the information about Ultra comes from Google-run product demos, so it's best taken with a grain of salt.

Google says Gemini Ultra can be used to help with things like physics homework, solving problems step by step on a worksheet and pointing out potential errors in already completed answers. Gemini Ultra can also be applied to tasks such as identifying scientific papers relevant to a particular problem, Google says, extracting information from those papers and “updating” a chart from one of them by generating the formulas necessary to recreate the chart with more recent data.

Gemini Ultra technically supports image generation, as mentioned above. But that capability won't make it into the production version of the model at launch, according to Google, perhaps because the mechanism is more complex than the way apps like ChatGPT generate images. Instead of sending prompts to an image generator (such as DALL-E 3, in the case of ChatGPT), Gemini generates images “natively” without an intermediate step.

Gemini Pro

Unlike Gemini Ultra, Gemini Pro is publicly available today. But confusingly, its capabilities depend on where it is used.

Google says that in Bard, where Gemini Pro first launched in text-only form, the model is an improvement over LaMDA in its reasoning, planning and understanding abilities. In an independent study, researchers from Carnegie Mellon and BerriAI found that Gemini Pro is indeed better than OpenAI's GPT-3.5 at handling longer, more complex chains of reasoning.

But the study also found that, like all large language models, Gemini Pro particularly struggles with math problems involving multiple digits, and users have encountered many examples of poor reasoning and errors. It also made a lot of factual errors on simple queries, such as who won the latest Oscars. Google has promised improvements, but it's unclear when they will arrive.

Gemini Pro is also available via API on Vertex AI, Google's fully managed AI development platform. The API accepts text as input and generates text as output. An additional endpoint, Gemini Pro Vision, can process text and images, including photos and videos, and output text, along the lines of OpenAI's GPT-4 with Vision model.

Using Gemini Pro on Vertex AI.

Within Vertex AI, developers can customize Gemini Pro for specific contexts and use cases through a tuning or “grounding” process. Gemini Pro can also connect to external third-party APIs to perform particular actions.

Sometime in “early 2024,” Vertex customers will be able to use Gemini Pro to power custom conversational voice and chat agents (i.e., chatbots). Gemini Pro will also become an option for powering search summarization, recommendation and answer generation features in Vertex AI, drawing on documents across modalities (e.g. PDFs, images) from different sources (e.g. OneDrive, Salesforce) to satisfy queries.

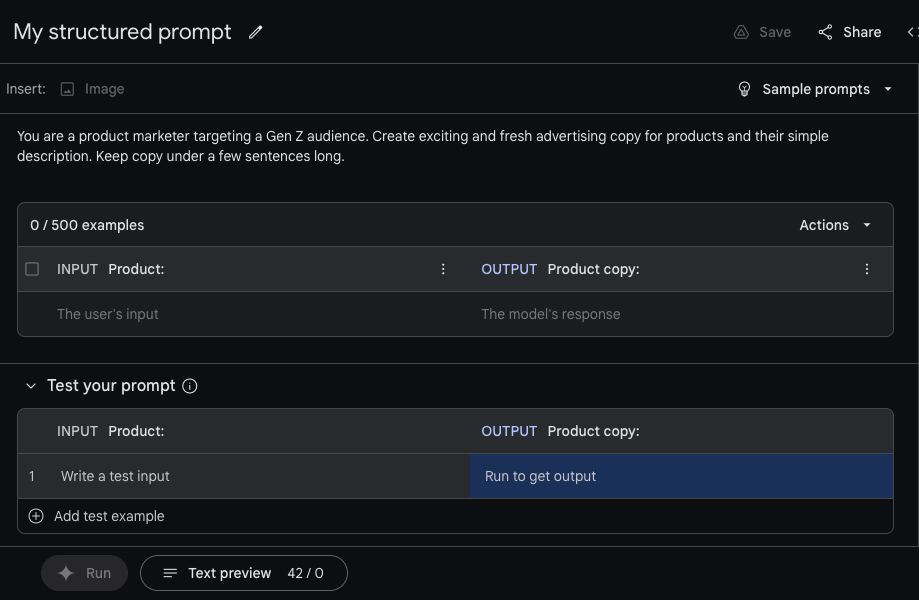

In AI Studio, Google's web tool for app and platform developers, there are workflows for creating free-form, structured and chat prompts using Gemini Pro. Developers have access to both the Gemini Pro and Gemini Pro Vision endpoints. They can adjust the model's “temperature” to control the creative range of the output, provide examples to give tone and style instructions, and adjust the safety settings.
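To give a rough feel for what a “temperature” setting does, here is a minimal sketch of temperature-scaled softmax, the generic technique commonly used when sampling from language models. The article does not describe how Gemini applies temperature internally, so treat this as an illustration only; the function name and the example scores are hypothetical.

```python
import math


def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities, scaled by a temperature.

    Generic illustration only (not Gemini's actual implementation):
    low temperatures sharpen the distribution, high ones flatten it.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next words.
scores = [2.0, 1.0, 0.5]
low = softmax_with_temperature(scores, 0.2)   # more predictable output
high = softmax_with_temperature(scores, 2.0)  # more varied output
print("low temperature: ", [round(p, 3) for p in low])
print("high temperature:", [round(p, 3) for p in high])
```

At the low setting nearly all of the probability lands on the top-scoring candidate, while the high setting spreads it more evenly, which is why a higher temperature tends to produce more varied output.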

Gemini Nano

Gemini Nano is a much smaller version of the Gemini Pro and Ultra models, and is efficient enough to run directly on (some) phones instead of sending the task to a server. So far, it powers two features on the Pixel 8 Pro: Summarize in Recorder and Smart Reply in Gboard.

The Recorder app, which allows users to press a button to record and transcribe audio, includes a Gemini-powered summary of recorded conversations, interviews, presentations, and other snippets. Users get these summaries even if they don't have a signal or Wi-Fi connection available and, in a nod to privacy, no data leaves their phone in the process.

Gemini Nano is also in Gboard, Google's keyboard app, as a developer preview. There, it powers a feature called Smart Reply, which helps suggest the next thing you'll want to say when you're having a conversation in a messaging app. Initially, the feature only works with WhatsApp, but it will come to more apps in 2024, Google says.

Is Gemini better than OpenAI's GPT-4?

There is no way to know how the Gemini family really stacks up until Google releases Ultra later this year, but the company has claimed improvements over the current state of the art, which is usually OpenAI's GPT-4.

Google has touted Gemini's superiority in benchmarks several times, stating that Gemini Ultra outperforms current results in "30 of the 32 academic benchmarks widely used in the research and development of large language models." Meanwhile, the company says Gemini Pro is more capable than GPT-3.5 at tasks like summarizing content, generating ideas, and writing.

But leaving aside the question of whether the benchmarks actually indicate a better model, the scores Google points to appear to be only marginally better than those of the corresponding OpenAI models. And, as mentioned above, some of the first impressions have not been very good, with users and academics pointing out that Gemini Pro tends to get basic facts wrong, struggles with translations, and offers poor coding suggestions.

How much will Gemini cost?

Gemini Pro is free to use on Bard and, for now, on AI Studio and Vertex AI.

However, once Gemini Pro comes out of preview on Vertex, input will cost $0.0025 per character, while output will cost $0.00005 per character. Vertex customers pay per 1,000 characters (between 140 and 250 words) and, in the case of models like Gemini Pro Vision, per image ($0.0025).

Suppose a 500-word article contains 2,000 characters. Summarizing that article with Gemini Pro would cost $5. On the other hand, generating an article of similar length would cost $0.10.
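The arithmetic behind those two figures can be reproduced with a short sketch. It assumes the per-character rates quoted above are applied directly to the character count; the function and variable names are illustrative and not part of any Google API.

```python
# Per-character rates as quoted in this article (US dollars).
INPUT_RATE_PER_CHAR = 0.0025
OUTPUT_RATE_PER_CHAR = 0.00005


def estimate_cost(num_chars: int, rate_per_char: float) -> float:
    """Estimated charge for num_chars characters at a per-character rate."""
    return num_chars * rate_per_char


# The article's example: a 500-word piece assumed to be 2,000 characters.
article_chars = 2000
input_cost = estimate_cost(article_chars, INPUT_RATE_PER_CHAR)    # summarizing
output_cost = estimate_cost(article_chars, OUTPUT_RATE_PER_CHAR)  # generating
print(f"input: ${input_cost:.2f}, output: ${output_cost:.2f}")
```

With these rates, feeding in the article costs 50 times more than generating one of the same length, which is why the summarization example comes out so much more expensive.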

Where you can try Gemini

Gemini Pro

The easiest place to experience Gemini Pro is Bard. An improved version of Pro is answering text-based Bard queries in English in the US right now, with support for additional languages and countries coming in the future.

Gemini Pro can also be accessed in preview on Vertex AI via an API. The API is free to use “within limits” at the moment and supports 38 languages and regions, including Europe, as well as features such as chat and filtering.

Elsewhere, Gemini Pro can be found in AI Studio. Using the service, developers can iterate on prompts and Gemini-based chatbots and then obtain API keys to use them in their applications, or export the code to a more feature-rich IDE.

Duet AI for Developers, Google's set of AI-powered assistance tools for completing and generating code, will begin using a Gemini model in the coming weeks. Google plans to bring Gemini models to development tools for Chrome and its Firebase mobile development platform around the same time, in early 2024.

Gemini Nano

Gemini Nano is on the Pixel 8 Pro and will come to other devices in the future. Developers interested in incorporating the model into their Android apps can sign up for an early preview.