AI is present in seemingly every aspect of modern life, from music and media to business and productivity, even personal relationships. There's so much going on that it can be hard to keep up, so here's a brief overview of concepts, from the latest big developments to the terms and companies you need to know to stay current in this fast-changing field.

To begin with, we all need to be on the same page: what is AI?

Artificial intelligence, also called machine learning (ML), is a kind of software system based on neural networks, a technique that was actually pioneered decades ago but has recently flourished thanks to powerful new computing resources. AI has enabled effective speech and image recognition, as well as the ability to generate synthetic images and voices, and researchers are hard at work making it possible for an AI to browse the web, make reservations, tweak recipes, and more.

This AI guide is divided into three parts that can be read in any order:

- First, the fundamental concepts you need to know, including the most important recent ones.

- Next, an overview of the major AI players and why they matter.

- And finally, a curated list of recent headlines and developments.

AI 101

One of the interesting things about AI is that although its basic concepts date back more than 50 years, few of them were familiar even to techies until very recently. If you feel lost, don't worry: almost everyone is.

One more thing to keep in mind: although it's called "artificial intelligence," that term is a bit misleading. There is no single definition of intelligence, but what these systems do is definitely closer to a calculator than to a brain; the input and output of this calculator are just a lot more flexible. You might think of artificial intelligence the way you think of artificial flavoring: it's imitation intelligence.

With that said, here are the basic terms you'll find in any AI discussion.

Neural networks

Our brains are made largely of interconnected cells called neurons, which come together to form complex networks that perform tasks and store information. People have tried to recreate this remarkable system in software since the 1960s, but the necessary processing power wasn't widely available until 15 to 20 years ago, when GPUs allowed digitally defined neural networks to flourish. At heart, a neural network is just a lot of points and lines: the points are data, and the lines are statistical relationships between those values. As in the brain, this can create a versatile system that quickly takes an input, passes it through the network, and produces an output. This system is called a model.
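The "points and lines" picture maps onto a few lines of code. This is a minimal sketch of a tiny feed-forward network in plain Python, assuming a sigmoid activation and hand-picked, hypothetical weights; in a real model the weights would be learned during training and there would be vastly more of them. Each number flowing through the network plays the role of a point, and each weight is a line connecting two points.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(inputs, layers):
    """Pass inputs through a list of layers; each layer is a (weights, biases) pair."""
    activations = inputs
    for weights, biases in layers:
        # Each new point is a weighted sum of every previous point, plus a bias, squashed.
        activations = [
            sigmoid(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations


# Hypothetical weights: 2 inputs -> 2 hidden points -> 1 output point.
layers = [
    ([[0.5, -0.4], [0.3, 0.8]], [0.1, -0.2]),
    ([[1.2, -0.7]], [0.05]),
]
output = forward([1.0, 0.0], layers)
print(output)
```

Swapping in different weights changes the output for the same input, which is all that "learning" ultimately adjusts.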

Model

The model is the actual collection of code that accepts inputs and returns outputs. The similarity in terminology to a statistical model, or a modeling system that simulates a complex natural process, is not accidental. In AI, "model" can refer to an entire system like ChatGPT, or to pretty much any AI or machine learning construct, whatever it does or produces. Models come in various sizes, meaning both the amount of storage space they take up and the computational power they need to run. And these depend on how the model is trained.
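Size is largely a matter of how many parameters (the weighted "lines" between points) a model has and how many bytes each one is stored in. The sketch below counts them for a small fully connected network; the layer widths and the 7-billion-parameter figure are illustrative assumptions, not numbers for any particular product, and the storage estimate ignores everything except the raw parameters.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network with the given layer widths."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # one weight per connection, one bias per output point
    return total


def storage_bytes(num_params, bytes_per_param):
    """Approximate storage for the raw parameters only (no file format overhead)."""
    return num_params * bytes_per_param


params = count_parameters([784, 512, 512, 10])
print(params)  # 669706
print(storage_bytes(params, 4) / 1e6, "MB at 32-bit precision")
print(storage_bytes(7_000_000_000, 2) / 1e9, "GB for a hypothetical 7B-parameter model at 16-bit")
```

The same arithmetic explains why the biggest models need racks of GPUs just to hold their weights in memory.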

Training

To create an AI model, the neural networks that form the basis of the system are exposed to a large amount of information in what is called a dataset or corpus. In doing so, these giant networks create a statistical representation of that data. This training process is the most computationally intensive part, meaning it takes weeks or months (you can run it for about as long as you want) on huge arrays of high-powered computers. The reason is that not only are the networks complex, but the datasets can be extremely large: billions of words or images that must be analyzed and represented in the giant statistical model. On the other hand, once the model is done cooking, it can be much smaller and less demanding to use, a process called inference.
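Training boils down to repeatedly nudging a model's parameters so its outputs better match the data. As a minimal sketch, here is gradient descent fitting a two-parameter straight line instead of a neural network; the dataset, learning rate, and number of passes are all illustrative assumptions. Real training applies the same basic idea (usually via backpropagation and more elaborate optimizers) to billions of parameters, which is where the weeks of computation go.

```python
import random


def train_linear(data, epochs=2000, lr=0.05):
    """Fit y ~ w*x + b to (x, y) pairs with plain gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # start from an untrained model
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Step each parameter a little way downhill.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


rng = random.Random(0)
# Hypothetical dataset: points scattered near the line y = 3x + 1.
xs = [i / 10 for i in range(-20, 21)]
data = [(x, 3 * x + 1 + rng.uniform(-0.1, 0.1)) for x in xs]
w, b = train_linear(data)
print(f"learned w={w:.2f}, b={b:.2f}")  # should land close to 3 and 1
```

Once training is done, only the two learned numbers are needed to make predictions; the loop over the whole dataset is not repeated.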

Inference

When the model is actually doing its job, we call it inference, very much in the traditional sense of the word: drawing a conclusion by reasoning about the available evidence. Of course, it's not exactly "reasoning," but rather statistically connecting the dots in the data it has ingested and, in effect, predicting the next dot. For example, given the prompt "Complete the following sequence: red, orange, yellow...", the model would find that these words correspond to the start of a list it has ingested, the colors of the rainbow, and infer the next item until it has produced the rest of that list. Inference is generally much less computationally costly than training: think of it like looking up a card in a card catalog rather than assembling the catalog. Big models still need to run on supercomputers and GPUs, but smaller ones can run on a smartphone or something even simpler.
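A real LLM uses a neural network rather than a lookup table, but the "predict the next dot" idea can be sketched with simple word-pair (bigram) counts. The corpus here is a made-up toy, and greedy "most frequent next word" decoding is an assumption chosen for simplicity; note that the model picks "yellow" after "orange" only because that pairing is more frequent in its data than "orange juice."

```python
from collections import Counter, defaultdict


def build_bigram_table(sequences):
    """Count how often each word follows each other word in the training sequences."""
    table = defaultdict(Counter)
    for seq in sequences:
        for current, following in zip(seq, seq[1:]):
            table[current][following] += 1
    return table


def complete(prompt, table, max_new=10):
    """Greedily append the most frequent next word until no continuation is known."""
    output = list(prompt)
    for _ in range(max_new):
        candidates = table.get(output[-1])  # .get avoids creating empty entries
        if not candidates:
            break
        output.append(candidates.most_common(1)[0][0])
    return output


# Hypothetical tiny "training corpus".
rainbow = ["red", "orange", "yellow", "green", "blue", "indigo", "violet"]
corpus = [rainbow, rainbow, ["fresh", "orange", "juice"]]
table = build_bigram_table(corpus)
print(complete(["red", "orange", "yellow"], table))
# ['red', 'orange', 'yellow', 'green', 'blue', 'indigo', 'violet']
```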

Generative AI

Everyone is talking about generative AI, and this broad term just means an AI model that produces an original output, such as an image or text. Some AIs summarize, some reorganize, some identify, and so on, but an AI that actually generates something (whether it "creates" is debatable) is especially popular right now. Just remember that the fact that an AI generated something doesn't mean it's correct, or even that it reflects reality at all. It only means it didn't exist before you asked for it, like a story or a painting.
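One common way generative models produce something new each time is by sampling from the probabilities they assign to possible next pieces, rather than always taking the top choice. This sketch assumes a softmax with a "temperature" knob and uses hypothetical scores; it illustrates the general idea, not any specific product's implementation. Note that sampling picks what is plausible, not what is true.

```python
import math
import random


def sample_next(scores, temperature=1.0, rng=random):
    """Pick one candidate at random, weighted by a softmax over score / temperature."""
    items = list(scores)
    scaled = [scores[item] / temperature for item in items]
    top = max(scaled)
    weights = [math.exp(s - top) for s in scaled]  # subtracting the max avoids overflow
    return rng.choices(items, weights=weights, k=1)[0]


# Hypothetical scores a model might give possible next words after "The cat sat on the".
scores = {"mat": 3.0, "sofa": 2.0, "roof": 1.5, "moon": 0.1}
rng = random.Random(0)
# Low temperature strongly favors the top score; higher temperature flattens the odds.
print([sample_next(scores, temperature=0.2, rng=rng) for _ in range(5)])
print([sample_next(scores, temperature=1.5, rng=rng) for _ in range(5)])
```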

Current terms

Beyond the basics, these are the most relevant AI terms in mid-2023.

Large Language Model (LLM)

The most influential and versatile form of AI available today, large language models are trained on nearly all the text that makes up the web and much of English-language literature. Ingesting all of this results in a massive foundation model. LLMs can converse and answer questions in natural language and imitate a variety of styles and types of written documents, as demonstrated by ChatGPT, Claude, and LLaMA. While these models are undeniably impressive, keep in mind that they are still pattern recognition engines: when they answer, they are attempting to complete a pattern they have identified, whether or not that pattern reflects reality. LLMs frequently hallucinate in their answers, which we'll get to shortly.

Foundation Model

Training a huge model from scratch on large datasets is costly and complex, so you don't want to do it more often than necessary. Foundation models are the big from-scratch ones that need supercomputers to run, but they can be trimmed down to fit in smaller containers, usually by reducing the number of parameters. You can think of parameters as the total number of points the model has to work with, and these days that can be millions, billions, or even trillions.
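One well-known way to trim a model is magnitude pruning: zero out the weights closest to zero, on the theory that they contribute least. This is a swapped-in illustration of the general idea, not a claim about how any particular foundation model is shrunk, and the weights are hypothetical. In practice, pruned models are often retrained briefly afterward, and real space savings also depend on storing the zeros efficiently.

```python
def prune_by_magnitude(weights, keep_fraction):
    """Zero out all but the largest-magnitude weights (ties at the cutoff are kept)."""
    if not 0 < keep_fraction <= 1:
        raise ValueError("keep_fraction must be in (0, 1]")
    k = max(1, round(len(weights) * keep_fraction))
    cutoff = sorted((abs(w) for w in weights), reverse=True)[k - 1]
    return [w if abs(w) >= cutoff else 0.0 for w in weights]


# Hypothetical weights from one layer of a model.
weights = [0.8, -0.05, 0.3, -0.9, 0.01, 0.45, -0.2, 0.02]
pruned = prune_by_magnitude(weights, keep_fraction=0.5)
print(pruned)  # [0.8, 0.0, 0.3, -0.9, 0.0, 0.45, 0.0, 0.0]
print(sum(1 for w in pruned if w != 0), "of", len(weights), "weights remain")
```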

Model Fine-Tuning

A foundation model like GPT-4 is smart, but it's also a generalist by design: it has absorbed everything from Dickens to Wittgenstein to the rules of Dungeons & Dragons, but none of that helps if you want it to help you write a cover letter for your résumé. Fortunately, models can be fine-tuned by giving them a bit of extra training on a specialized dataset, say, a few thousand job applications that happen to be lying around. This gives the model a much better sense of how to help in that domain without throwing away the general knowledge it has gathered from the rest of its training data.
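Mechanically, fine-tuning is just more training that starts from the existing weights instead of from scratch, usually on far less data. This sketch uses a two-parameter model and made-up numbers to stay readable: the "pretrained" values stand in for what a large general dataset taught the model, and the small domain dataset is hypothetical. Real fine-tuning updates some or all of billions of weights.

```python
def mse(params, data):
    """Mean squared error of the line w*x + b on (x, y) pairs."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def gradient_step(params, data, lr):
    """One step of gradient descent on the mean squared error."""
    w, b = params
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return (w - lr * grad_w, b - lr * grad_b)


def fine_tune(pretrained, domain_data, steps=100, lr=0.05):
    """Continue training from already-learned parameters instead of starting from zero."""
    params = pretrained
    for _ in range(steps):
        params = gradient_step(params, domain_data, lr)
    return params


# Hypothetical: parameters learned earlier on general data (y ~ 2x), and a small
# specialized dataset where outputs run a bit higher (y = 2x + 0.5).
pretrained = (2.0, 0.0)
domain_data = [(x, 2 * x + 0.5) for x in (0.0, 0.5, 1.0, 1.5, 2.0)]
tuned = fine_tune(pretrained, domain_data)
print(mse(pretrained, domain_data), "->", mse(tuned, domain_data))
```

Because the starting point is already close, a short run with a modest learning rate is enough to adapt it, which is part of why fine-tuning is so much cheaper than training.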

Reinforcement learning from human feedback, or RLHF, is a special kind of fine-tuning you hear a lot about: it uses data from humans interacting with the LLM to improve its communication skills.
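In the commonly described RLHF setup, human labelers compare pairs of model answers, a separate reward model is trained to score the preferred answer higher, and the LLM is then tuned to produce answers that score well. The sketch covers only the reward-model piece, using a standard pairwise (Bradley-Terry style) loss; the scores are hypothetical, and the reinforcement-learning step that follows is not shown.

```python
import math


def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: small when the human-preferred answer gets the higher score."""
    margin = reward_chosen - reward_rejected
    # Equivalent to -log(sigmoid(margin)).
    return math.log1p(math.exp(-margin))


# Hypothetical reward-model scores for two answers to the same prompt,
# where a human labeler preferred the first answer.
print(preference_loss(2.0, -1.0))  # reward model agrees with the human: low loss
print(preference_loss(-1.0, 2.0))  # reward model disagrees: high loss
```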

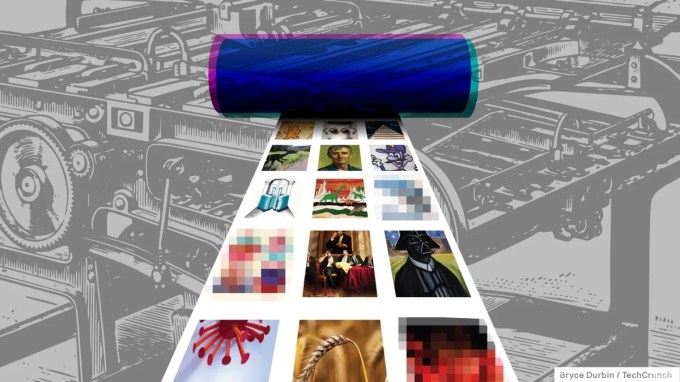

Diffusion

Image generation can be done in many ways, but the most successful so far is diffusion, which is the technique at the heart of Stable Diffusion, Midjourney, and other popular generative AIs. Diffusion models are trained by showing them images that are gradually degraded by adding digital noise until nothing of the original remains. By observing this, diffusion models also learn to do the reverse, gradually adding detail to pure noise to form an arbitrarily defined image. We're already starting to move beyond this for image generation, but the technique is reliable and relatively well understood, so don't expect it to disappear anytime soon.
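The "gradually add noise" half of diffusion has a convenient closed form in the widely used DDPM formulation: after t steps, x_t = sqrt(a_t) * x_0 + sqrt(1 - a_t) * noise, where a_t is the fraction of original signal left. This sketch assumes a linear noise schedule and a 4-pixel stand-in for an image. In a real diffusion model a trained neural network predicts the noise, and generation repeats that denoising over many small steps starting from pure noise; here the true noise is plugged in only to show that the arithmetic inverts.

```python
import math
import random


def noise_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Linear schedule; returns alpha_bar[t], the share of original signal variance left at step t."""
    alpha_bars, product = [], 1.0
    for i in range(steps):
        beta = beta_start + (beta_end - beta_start) * i / (steps - 1)
        product *= 1.0 - beta
        alpha_bars.append(product)
    return alpha_bars


def add_noise(x0, alpha_bar, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar)*x0 + sqrt(1 - alpha_bar)*noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * p + math.sqrt(1 - alpha_bar) * n for p, n in zip(x0, noise)]
    return xt, noise


def estimate_x0(xt, predicted_noise, alpha_bar):
    """Undo the formula above, given a noise prediction (normally from a trained network)."""
    return [(v - math.sqrt(1 - alpha_bar) * n) / math.sqrt(alpha_bar)
            for v, n in zip(xt, predicted_noise)]


rng = random.Random(0)
image = [0.2, 0.9, -0.5, 0.0]  # hypothetical 4-pixel "image"
alpha_bars = noise_schedule(1000)
noisy, true_noise = add_noise(image, alpha_bars[500], rng)
recovered = estimate_x0(noisy, true_noise, alpha_bars[500])
print(alpha_bars[0], alpha_bars[-1])  # nearly all signal at the start, almost none at the end
print([round(v, 6) for v in recovered])
```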

Hallucination

Originally, this referred to certain imagery from training slipping into unrelated output, such as buildings that seemed to be made of dogs because dogs were overrepresented in the training set. Now an AI is said to be hallucinating when, because it has insufficient or conflicting data in its training set, it simply makes something up.

This can be either an asset or a liability. An AI asked to create original or even derivative art is hallucinating its output; an LLM can be told to write a love poem in the style of Yogi Berra, and it will happily do so, even though nothing like that exists anywhere in its dataset. But it can be a problem when a factual answer is wanted: models will confidently present a response that is half real, half hallucination. At present there is no easy way to tell which is which except to check for yourself, because the model itself doesn't actually know what is "true" or "false"; it is only trying to complete a pattern as best it can.

Artificial General Intelligence (AGI), or Strong AI

Artificial general intelligence, or strong AI, is not really a well-defined concept, but the simplest explanation is that it is intelligence powerful enough not only to do what people do, but to learn and improve itself as we do. Some worry that this cycle of learning, integrating those ideas, and then learning and growing faster will be self-perpetuating and result in a superintelligent system that is impossible to restrain or control. Some have even proposed delaying or limiting research to prevent this possibility.

It's a terrifying idea, sure, and movies like "The Matrix" and "Terminator" have explored what could happen if AI gets out of control and tries to eliminate or enslave humanity. But these stories are not based on reality. The semblance of intelligence we see in things like ChatGPT is an impressive act, but it has little in common with the abstract reasoning and dynamic multi-domain activity we associate with "real" intelligence. While it's almost impossible to predict how things will progress, it can be helpful to think of AGI as something like interstellar space travel: we all understand the concept and are apparently working towards it, but at the same time we're incredibly far from achieving anything like it. And because of the immense resources and fundamental scientific breakthroughs required, no one will suddenly make it by accident!

It's interesting to think about AGI, but there's no sense borrowing trouble when, as commentators point out, AI already poses real and consequential threats today despite its limitations, and indeed largely because of them. Nobody wants Skynet, but you don't need a nuke-armed superintelligence to do real damage: people are losing their jobs and falling for hoaxes today. If we can't solve those problems, what chance do we have against a T-1000?

Main players in AI

OpenAI

If there's a household name in AI, this is it. OpenAI began, as its name suggests, as an organization that intended to conduct research and share the results more or less openly. Since then, it has restructured itself as a more traditional for-profit company that provides access to its advances in language models like ChatGPT through APIs and apps. It is headed by Sam Altman, a technotopian billionaire who has nonetheless warned of the risks AI could present. OpenAI is the recognized leader in LLMs, but it also conducts research in other areas.

Microsoft

Unsurprisingly, Microsoft has done its fair share of AI research, but like other companies, it has more or less failed to turn its experiments into major products. Its smartest move was to invest early in OpenAI, which earned it an exclusive long-term partnership with the company; OpenAI's technology now powers Microsoft's Bing conversational agent. Although its own contributions are smaller and less immediately applicable, the company has a considerable research presence.

Google

Known for its "moonshots," Google somehow missed the boat on AI even though its researchers literally invented the technique that led directly to today's AI explosion: the transformer. It is now hard at work on its own LLMs and other agents, but it is clearly playing catch-up after spending most of its time and money over the past decade pushing the outdated "virtual assistant" concept of AI. CEO Sundar Pichai has repeatedly said that the company is aligning itself firmly behind AI in search and productivity.

Anthropic

After OpenAI's pivot away from openness, siblings Dario and Daniela Amodei left it to start Anthropic, intending it to fill the role of an open and ethically considerate AI research organization. With the amount of cash they have on hand, they are a serious rival to OpenAI, even if their models, like Claude, are not yet as popular or well known.

Stability

Controversial but unavoidable, Stability represents the open-source, do-what-you-want school of AI implementation, hoovering up everything on the internet and making the generative AI models it trains freely available if you have the hardware to run them. This is very much in line with the “information wants to be free” philosophy, but it has also accelerated ethically dubious projects like generating pornographic images and using intellectual property without consent (sometimes at the same time).

Elon Musk

Not one to be left out, Musk has been outspoken about his fears regarding runaway AI, as well as voicing some sour grapes after he contributed to OpenAI early on and it went in a direction he didn't like. While Musk is not an expert on this topic, his antics and comments, as usual, provoke widespread responses (he was one of the signatories of the aforementioned "AI pause" letter), and he is trying to start his own research outfit.