Last June, just months after the launch of OpenAI's ChatGPT, a pair of New York City lawyers infamously used the tool to write a very poor brief. The AI cited fake cases, leading to an uproar, an angry judge, and two very embarrassed lawyers. It was proof that while bots like ChatGPT can be useful, you really have to check their work carefully, especially in a legal context.

The case didn't escape the folks at LexisNexis, a legal software company that offers tools to help lawyers find the right case law to make their legal arguments. The company sees the potential for AI to help reduce much of the mundane legal work each attorney performs, but also recognizes these very real problems as it begins its generative AI journey.

Jeff Reihl, CTO of LexisNexis, understands the value of AI. In fact, his company has been incorporating the technology into its platform for some time. But being able to add ChatGPT-like functionality to its legal toolbox would help lawyers work more efficiently, speeding up brief writing and helping them find citations faster.

“We, as an organization, have been working with artificial intelligence technologies for several years. I think what's really different now since ChatGPT came out in November is the opportunity to generate text and the conversational aspects that come with this technology,” Reihl said.

And while he sees that this could be a powerful layer on top of the LexisNexis family of legal software, it also comes with risks. "With generative AI, I think we're really seeing the power and limitations of that technology, what it can and can't do well," he said. "And this is where we think that by bringing together our capabilities that we have and the data that we have, along with the capabilities that these large language models have, we can do something that is fundamentally transformative for the legal industry."

The survey says ...

LexisNexis recently surveyed 1,000 lawyers about the potential of generative AI for their profession. The data showed that respondents were mostly positive, but it also revealed that lawyers recognize the weaknesses of the technology, and that awareness is tempering some of the enthusiasm.

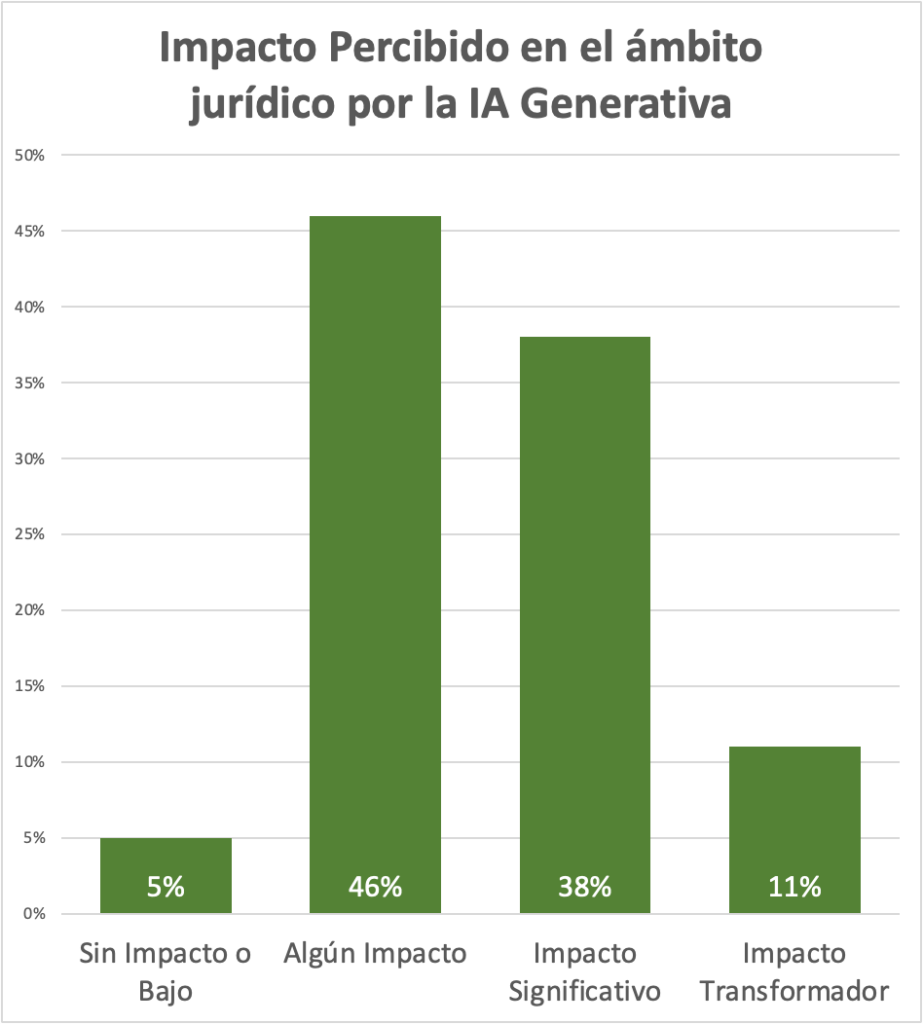

As an example, when asked about the perceived impact of generative AI on their profession, 46% gave the rather lukewarm answer of “some impact.” But the next most common answer, at 38%, was “significant impact.” Now, these are subjective opinions about a technology that is still being evaluated and is heavily hyped, but the results show that the majority of attorneys surveyed at least see the potential for generative AI to help them do their jobs.

Keep in mind that vendor-run surveys always leave room for bias in how questions are framed and how the data is interpreted, but the results are still useful for seeing, in general terms, how lawyers think about the technology.

LexisNexis Lawyer Survey Question exploring the perceived impact of AI on the practice of law.

Image credits: LexisNexis

One of the key issues here is trusting the information you get from the bot, and LexisNexis is working on several ways to build user trust in the results.

Addressing known AI issues

The trust issue is one that all generative AI users will encounter, at least for the time being, but Reihl says his company recognizes what's at stake for its customers.

"Given their role and what they do as a profession, what they do has to be 100% accurate," he said. "They need to make sure that if they cite a case, it is actually still good law and represents their clients in the right way."

“If they do not represent their clients in the best way, they could be disbarred. And we have a track record of being able to provide reliable information to our customers.”

Sebastian Berns, a PhD researcher at Queen Mary University of London, said that any LLM, once deployed, will hallucinate. There is no way around it.

“An LLM is typically trained to always produce a result, even when the input is very different from the training data,” he said. “A standard LLM has no way of knowing whether it is able to reliably answer a query or make a prediction.”

LexisNexis is trying to mitigate that problem in several ways. For starters, it is training models on its own vast legal data set to offset some of the reliability issues seen with foundation models. Reihl says the company is also working with the most current case law in its databases, so there won't be timing issues like those with ChatGPT, which was trained only on information from the open web through 2021.

The company believes that using more relevant and timely training data should produce better results. “We can ensure that any case referenced is in our database, so we will not provide a citation that is not a real case, because we already have those cases in our own database,” Reihl said.

While it's unclear whether any company can completely eliminate hallucinations, LexisNexis lets lawyers who use the software see how the bot arrived at an answer by providing a reference to the source.

“So we can address those limitations that large language models have by combining the power that they have with our technology and giving users references to the case so they can validate it themselves,” Reihl said.

It's important to note that this is a work in progress: LexisNexis is currently working with six customers to refine the approach based on their feedback. The plan is to launch AI-powered tools in the coming months.