It's not that Nvidia is desperate for positive reinforcement after its latest earnings reports, but it's worth noting how well the company's robotics strategy has paid off in recent years. Nvidia invested heavily in this space at a time when bringing robotics beyond manufacturing still seemed like a pipe dream to many. April marks a decade since the launch of the TK1. Nvidia described the offering at the time this way: “Jetson TK1 brings the capabilities of Tegra K1 to developers in a compact, low-power platform that makes development as simple as developing on a PC.”

This February, the company announced, “One million developers around the world are now using the Nvidia Jetson platform for cutting-edge AI and robotics to create innovative technologies. In addition, more than 6,000 companies (a third of which are startups) have integrated the platform with their products.”

You'd be hard-pressed to find a robotics developer who hasn't spent time with the platform, and frankly, it's remarkable how users range from hobbyists to multinational corporations. That's the kind of reach companies like Arduino would kill for.

The company's huge offices in Santa Clara, in buildings opened in 2018, are impossible to miss from the San Tomas Expressway. In fact, a pedestrian bridge crosses the road and connects the old and new headquarters. The new space consists primarily of two buildings, Voyager and Endeavor, at 500,000 and 750,000 square feet, respectively.

Between the two is an open-air walkway lined with trees, beneath large crisscrossing trellises supporting solar panels. The South Bay Big Tech headquarters battle has heated up a lot in recent years, but when you're effectively printing money, buying land and building offices is probably the best place to put it. Just ask Apple, Google and Facebook.

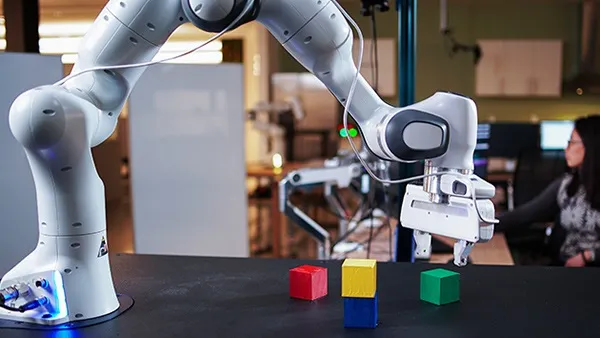

Meanwhile, Nvidia's entry into robotics has benefited from all sorts of advantages. The company knows silicon as well as anyone right now, from design and manufacturing to creating low-power systems capable of performing increasingly complex tasks. This is essential for a world that is increasingly investing in AI and ML. Nvidia's extensive gaming knowledge has also proven to be a great asset for Isaac Sim, its robotics simulation platform. It's a perfect storm, indeed.

Speaking at SIGGRAPH in August 2023, CEO Jensen Huang explained: “We realized that rasterization was reaching its limits. 2018 was a ‘bet the company’ moment. It required us to reinvent the hardware, the software, the algorithms. And while we were reinventing CG with AI, we were reinventing the GPU for AI.”

After a few demonstrations, Deepu Talla, vice president and general manager of Embedded & Edge Computing at Nvidia, pointed out a Cisco teleconferencing system on the back wall running the Jetson platform. It's a far cry from the typical AMRs we tend to associate with Jetson.

“Most people think of robotics as something physical that usually has arms, legs, wings or wheels, which is considered inside-out perception,” he said, referring to the office device. “Just like humans. Humans have sensors to see our surroundings and become aware of the situation. There is also something called outside-in robotics. Those things don't move. Imagine you had cameras and sensors in your building. They are able to see what is happening. We have a platform called Nvidia Metropolis. It has video analytics and adapts to traffic intersections, airports and retail environments.”

What was the initial reaction when the Jetson system was shown in 2015? It came from a company that most people associate with gaming.

Yes, although that is changing. But you're right. This is what most consumers are used to. AI was still new, and you had to explain what use case you were addressing. In November 2015, Jensen [Huang] and I went to San Francisco to present a few things. The example we had was an autonomous drone. If you wanted to make an autonomous drone, what would it take? You would need to have so many sensors, you need to process so many frames, you need to identify this. We did some rough math to work out how many computations we would need. And if you wanted to do it then, what was your option? There was nothing like that at the time.

How did Nvidia's gaming history influence your robotics projects?

When we founded the company, games were what funded us to build GPUs. We then added CUDA to our GPUs so they could be used in non-graphics applications. CUDA is essentially what got us to AI. Now AI is helping games, thanks to ray tracing, for example. At the end of the day, we are building microprocessors with GPUs. All this middleware we talk about is the same. CUDA is the same for robotics, high-performance computing, and cloud AI. Not everyone needs to use all the features of CUDA, but it's the same.

How does Isaac Sim compare to [Open Robotics’ Gazebo] simulator?

Gazebo is a good basic simulator for doing limited simulations. We are not trying to replace Gazebo. Gazebo is good for basic tasks. We provide a simple ROS bridge to connect Gazebo with Isaac Sim. But Isaac can do things that no one else can do. It is built on Omniverse. All the things you have in Omniverse come to Isaac Sim. It's also designed to connect to any AI model, any framework, all the things we do in the real world. You can connect it to obtain more complete autonomy. It also has visual fidelity.

You are not looking to compete with ROS (Robot Operating System).

No, no. We are trying to build a platform. We want to connect with everyone and help others leverage our platform just as we leverage theirs. There is no point in competing.

Are you working with research universities?

Absolutely. Dieter Fox is the head of robotics research at Nvidia. He is also a professor of robotics at the University of Washington. And many of our research members also have dual affiliations. In many cases they are affiliated with universities. We publish to the entire community. When you're doing research, it has to be open.

Are you working with end users on things like deployment or fleet management?

Probably not. For example, if John Deere sells a tractor, farmers do not talk to us. We have tools to help them, but fleet management is typically carried out by whoever provides the service or builds the robot.

When did robotics become a piece of the puzzle for Nvidia?

I would say the early 2010s. That's when AI emerged. I think the first time deep learning came into the world was in 2012. There was a recent profile of Bryan Catanzaro. He immediately said on LinkedIn, “I didn't actually convince Jensen, but simply explained deep learning to him. He instantly formed his own conviction and turned Nvidia into an artificial intelligence company. It was inspiring to watch, and sometimes I still can't believe I was able to be there to witness Nvidia's transformation.”

2015 was when we started with AI not only for the cloud, but also for the edge, for both Jetson and autonomous driving.

When you talk about generative AI with people, how do you convince them that it's more than just a fad?

I think it shows in the results. You can already see the improvement in productivity. It can compose an email for me. It's not exactly correct, but I don't have to start from scratch. It's giving me 70%. There are obvious things you can already see that are definitely a step-function improvement over how things were before. Summarizing something is not perfect. I'm not going to let it read and summarize for me. So some signs of productivity improvements can already be seen.