AI startup Anthropic, backed by Google and hundreds of millions in venture capital (and perhaps soon hundreds of millions more), has announced the latest version of its GenAI technology, Claude. And the company claims that the chatbot surpasses OpenAI's GPT-4 in terms of performance.

Claude 3, as Anthropic's new GenAI is called, is a family of models: Claude 3 Haiku, Claude 3 Sonnet and Claude 3 Opus, with Opus being the most powerful. All show "increased capabilities" in analysis and forecasting, Anthropic claims, as well as improved performance on specific benchmarks compared to models like ChatGPT and GPT-4 (but not GPT-4 Turbo) and Google's Gemini 1.0 Ultra (but not Gemini 1.5 Pro).

Notably, Claude 3 is Anthropic's first multimodal GenAI, meaning it can analyze both text and images, similar to some versions of GPT-4 and Gemini. Claude 3 can process photographs, charts, graphs and technical diagrams, drawing them from PDF files, slideshows and other types of documents.

In a step beyond some GenAI rivals, Claude 3 can analyze multiple images in a single request (up to a maximum of 20). This allows it to compare and contrast images, Anthropic notes.

But Claude 3's image processing has limits.

Anthropic has prevented the models from identifying people, no doubt wary of the ethical and legal implications. And the company admits that Claude 3 is prone to errors with “low-quality” images (less than 200 pixels) and has trouble with tasks involving spatial reasoning (for example, reading the face of an analog clock) and object counting (Claude 3 cannot give exact counts of objects in images).

Nor will Claude 3 generate artwork. The models strictly analyze images, at least for now.

Whether working with text or images, Anthropic says customers can expect Claude 3 to follow multi-step instructions, produce structured output in formats such as JSON and converse in languages other than English better than its predecessors. Claude 3 should also decline to answer questions less frequently thanks to a "more nuanced understanding of the requests," says Anthropic. And soon, the models will cite the sources of their answers so users can verify them.

"Claude 3 tends to generate more expressive and engaging responses," Anthropic writes in a support article. "It is easier to guide and direct compared to our legacy models. Users should find that they can achieve the desired results with shorter, more concise prompts."

Some of those improvements come from Claude 3's expanded context.

A model's context, or context window, refers to the input data (for example, text) that the model considers before generating output. Models with small context windows tend to “forget” the content of even very recent conversations, leading them to stray off topic, often in problematic ways. As an added advantage, large-context models can better capture the narrative flow of the data they receive and generate contextually richer responses (at least hypothetically).

Anthropic says Claude 3 will initially support a context window of 200,000 tokens, equivalent to about 150,000 words, and that select customers will get a context window of 1 million tokens (~700,000 words). This is on par with Google's newest GenAI model, the aforementioned Gemini 1.5 Pro, which also offers a context window of up to 1 million tokens.
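To make the token-to-word arithmetic concrete, here is a minimal sketch that derives the words-per-token ratio implied by the two figures quoted above. The function names are hypothetical, and the ratios are rough rules of thumb based only on the article's numbers, not an official conversion; real ratios depend on the tokenizer and the text.

```python
# Figures quoted in the article: (context window in tokens, approximate words).
QUOTED_WINDOWS = {
    "standard": (200_000, 150_000),
    "select customers": (1_000_000, 700_000),
}


def words_per_token(tokens: int, words: int) -> float:
    """Return the words-per-token ratio implied by a quoted pair."""
    return words / tokens


def estimate_words(tokens: int, ratio: float = 0.75) -> int:
    """Rough word count for a token budget (the ratio is an assumption)."""
    return round(tokens * ratio)


if __name__ == "__main__":
    for label, (tokens, words) in QUOTED_WINDOWS.items():
        ratio = words_per_token(tokens, words)
        print(f"{label}: {tokens:,} tokens ~ {words:,} words ({ratio:.2f} words/token)")
```

The two quoted pairs imply slightly different ratios (0.75 and 0.70), which suggests that both word counts are rounded approximations.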

Now, just because Claude 3 is an upgrade over what came before doesn't mean it's perfect.

In a technical whitepaper, Anthropic admits that Claude 3 is not immune to the problems that plague other GenAI models, namely biases and hallucinations (i.e. making things up). Unlike some GenAI models, Claude 3 cannot search the web; the models can only answer questions using data from before August 2023. And while Claude is multilingual, it is not as fluent in certain “low-resource” languages as it is in English.

But Anthropic is promising updates to Claude 3 in the coming months.

"We do not believe that model intelligence is close to its limits and we plan to release improvements to the Claude 3 model family in the coming months," the company writes in a blog post.

Opus and Sonnet are now available on the web and through Anthropic's API and development console, Amazon's Bedrock platform, and Google's Vertex AI. Haiku will follow later this year.

Here is the price breakdown:

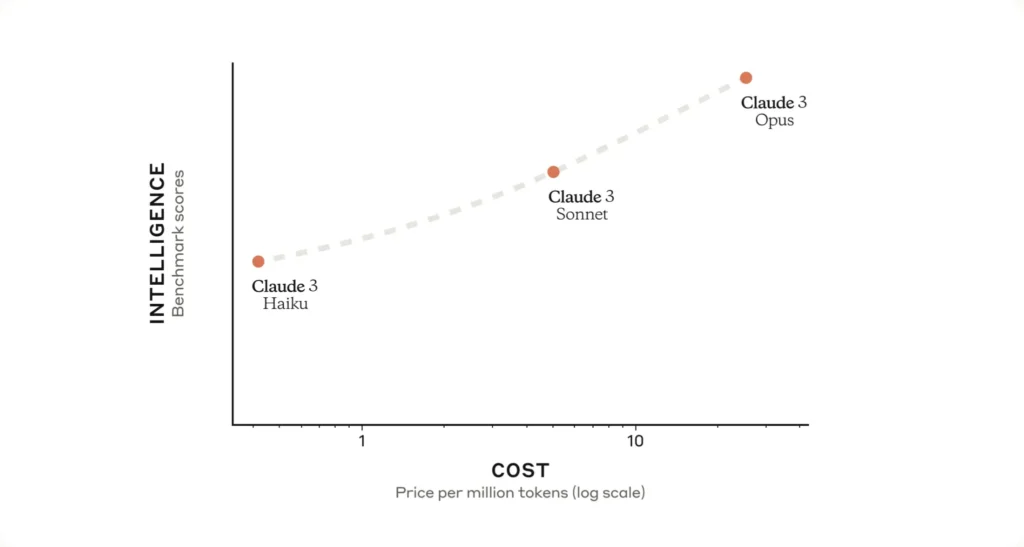

- Opus: $15 per million tokens in, $75 per million tokens out

- Sonnet: $3 per million tokens in, $15 per million tokens out

- Haiku: $0.25 per million tokens in, $1.25 per million tokens out
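As a rough illustration of what these rates mean in practice, the sketch below estimates the cost of a single request for each model. The `estimate_cost` helper and the example request size (a full 200,000-token prompt with a 1,000-token reply) are assumptions made for illustration; only the per-million-token prices come from the list above.

```python
# USD per million tokens as listed in the article: (input price, output price).
PRICES_PER_MILLION = {
    "opus": (15.00, 75.00),
    "sonnet": (3.00, 15.00),
    "haiku": (0.25, 1.25),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of one request at the listed rates."""
    price_in, price_out = PRICES_PER_MILLION[model]
    return (input_tokens * price_in + output_tokens * price_out) / 1_000_000


if __name__ == "__main__":
    # Hypothetical request: fill the 200,000-token window, get 1,000 tokens back.
    for model in PRICES_PER_MILLION:
        cost = estimate_cost(model, input_tokens=200_000, output_tokens=1_000)
        print(f"{model}: ${cost:.4f}")
```

At these rates, output tokens cost five times as much as input tokens for every model, so long generated answers weigh on the bill more than long prompts of the same length.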

So that's Claude 3. But what's the bird's-eye view?

Anthropic's ambition is to create a next-generation algorithm for "AI self-teaching." Such an algorithm could be used to create virtual assistants that can answer emails, conduct research, and generate art, books, and more, some of which we have already had a taste of with GPT-4 and other large language models.

Anthropic hints at this in the aforementioned blog post, saying that it plans to add features to Claude 3 that enhance its out-of-the-box capabilities by allowing Claude to interact with other systems, code “interactively,” and offer “advanced agent capabilities.”

This last point recalls OpenAI's effort to create a software agent that automates complex tasks, such as transferring data from a document to a spreadsheet or automatically filling out expense reports and entering them into accounting software. OpenAI already offers an API that allows developers to create “agent-like experiences” in their apps, and Anthropic reportedly intends to offer similar functionality.

Could we see an image generator from Anthropic next? It would be surprising. Image generators are the subject of a lot of controversy today, mainly for reasons related to copyright and bias. Recently, Google was forced to disable its image generator after it injected diversity into images with a farcical disregard for historical context. And several image generator vendors are in legal battles with artists who accuse them of profiting from their work by training GenAI on that work without offering compensation or credit.

It will be interesting to see the evolution of Anthropic's technique for training GenAI, "constitutional AI," which the company says makes the behavior of its GenAI easier to understand, more predictable, and easier to adjust as needed. Constitutional AI aims to provide a way to align AI with human intentions by having models answer questions and perform tasks using a simple set of guiding principles. For example, for Claude 3, Anthropic said it added a principle, informed by crowdsourced feedback, that instructs models to be understanding of and accessible to people with disabilities.

Whatever Anthropic's endgame, it is in it for the long haul. According to a presentation leaked in May of last year, the company aims to raise up to $5 billion in the next 12 months, which could be the foundation it needs to remain competitive with OpenAI. After all, training models doesn't come cheap. It's well on its way, with $2 billion and $4 billion in capital committed by Google and Amazon, respectively, and more than a billion combined from other backers.