For Jae Lee, a data scientist by training, it never made sense that video, which has become a huge part of our lives with the rise of platforms like TikTok, Vimeo and YouTube, was difficult to search because of the technical barriers to understanding context. Searching video titles, descriptions and tags was always fairly easy and required no more than a basic algorithm. But searching within videos for specific moments and scenes was well beyond the capabilities of the technology, particularly if those moments and scenes were not labeled in an obvious way.

To solve this problem, Lee and friends from the tech industry built a cloud service for searching and understanding videos. The result was Twelve Labs, which has since raised $17 million in venture capital. Radical Ventures led the latest round, an extension, with participation from Index Ventures, WndrCo, Spring Ventures, Weights & Biases CEO Lukas Biewald and others, Lee told TechCrunch in an email.

“Twelve Labs' vision is to help developers build programs that can see, hear and understand the world like we do, by giving them the most powerful video understanding infrastructure,” Lee said.

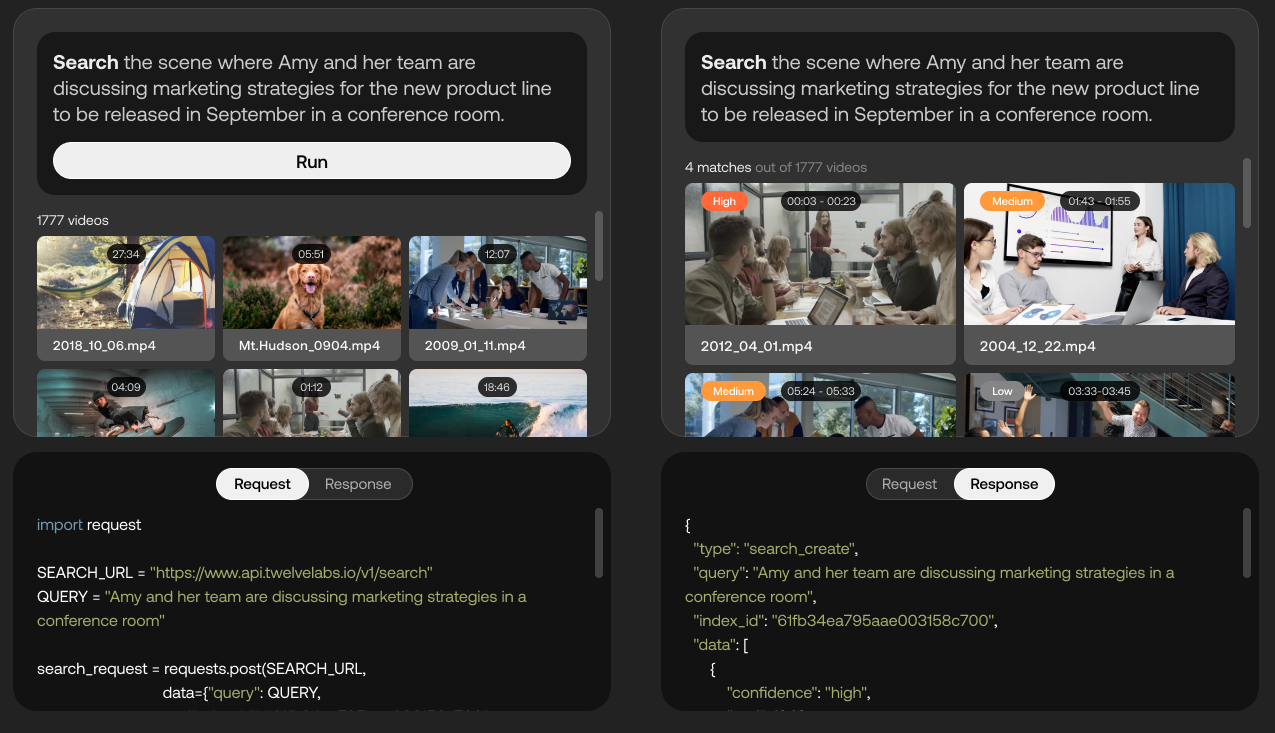

A demonstration of the capabilities of the Twelve Labs platform. Image credits: Twelve Labs

Twelve Labs, which is currently in closed beta, uses AI to extract "rich information" from videos, such as movement and actions, objects and people, sound, on-screen text and speech, and to identify the relationships between them. The platform converts these elements into mathematical representations called "vectors" and forms "temporal connections" between frames, enabling applications such as searching for specific scenes in a video.
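To make the idea of searching by "vectors" more concrete, below is a minimal, generic sketch of vector-based scene search. It assumes each video segment has already been turned into an embedding vector by some model; the segment labels, the three-dimensional toy vectors and the `search` helper are all hypothetical illustrations, not Twelve Labs' models or API.

```python
import math

# Hypothetical toy embeddings. In a real system a multimodal model would
# produce much longer vectors from each segment's frames, audio and text.
SEGMENTS = {
    "00:00-00:10 chef chops onions": [0.9, 0.1, 0.0],
    "00:10-00:20 crowd cheers at goal": [0.0, 0.2, 0.95],
    "00:20-00:30 knife sharpening demo": [0.8, 0.3, 0.1],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_vec, segments, top_k=2):
    """Rank segments by similarity to a query embedding (brute force)."""
    scored = [(cosine(query_vec, vec), label) for label, vec in segments.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [label for _, label in scored[:top_k]]


# Hypothetical embedding of a text query such as "cooking with a knife".
query = [0.85, 0.2, 0.05]
print(search(query, SEGMENTS))
```

Here the two knife-related segments rank above the stadium clip because their vectors point in a similar direction to the query's. Production systems typically replace the brute-force loop with an approximate nearest-neighbor index and also model context across neighboring frames, which this sketch leaves out.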

“As part of achieving the company's vision of helping developers create intelligent video applications, the Twelve Labs team is building 'foundation models' for multimodal video understanding,” Lee said. “Developers will be able to access these models through a set of APIs, performing not only semantic search but also other tasks such as 'chaptering' long-form videos, generating summaries and video Q&A.”

Google takes a similar approach to video understanding with its MUM AI system, which the company uses to power video recommendations across Google Search and YouTube by picking out subjects in videos (for example, “acrylic painting materials”) based on audio, text and visual content. But while the technology may be comparable, Twelve Labs is one of the first vendors to commercialize it; Google has chosen to keep MUM internal and has declined to make it available through a public API.

That said, Google, Microsoft and Amazon all offer services (i.e., Google Cloud Video AI, Azure Video Indexer and Amazon Rekognition) that recognize objects, places and actions in videos and extract rich metadata at the frame level. There is also Reminiz, a French computer vision startup that claims to be able to index any type of video and add tags to both recorded and live-streamed content. But Lee argues that Twelve Labs is sufficiently differentiated, in part because its platform allows customers to fine-tune the AI for specific categories of video content.

“What we have found is that AI products built to detect specific problems show high accuracy in their ideal scenarios in a controlled environment, but do not adapt as well to messy real-world data,” Lee said. “They act more like a rule-based system and therefore lack the ability to generalize when variations occur. We also see this as a limitation rooted in a lack of understanding of context. Understanding context is what gives humans the unique ability to generalize across seemingly different situations in the real world, and this is where Twelve Labs excels.”

Beyond search, Lee says Twelve Labs' technology can power things like ad insertion and content moderation, for example by intelligently determining which videos showing knives are violent and which are instructional. It can also be used for real-time commentary and media analytics, he says, and to automatically generate highlight reels from videos.

A little over a year after its founding in March 2021, Twelve Labs has paying customers and a multi-year contract with Oracle to train AI models using Oracle's cloud infrastructure. Looking ahead, the startup plans to invest in developing its technology and expanding its team.

“For most companies, despite the enormous value that can be achieved through large models, it really doesn't make sense for them to train, operate and maintain these models themselves. By leveraging the Twelve Labs platform, any organization can take advantage of powerful video understanding capabilities with just a few intuitive API calls,” Lee said. “The future direction of AI innovation is heading squarely toward multimodal video understanding, and Twelve Labs is well positioned to push the boundaries even further in 2023.”