Nvidia, always keen to incentivize purchases of its latest GPUs, is launching a tool that lets owners of GeForce RTX 30 Series and 40 Series cards run an AI-powered chatbot offline on a Windows PC.

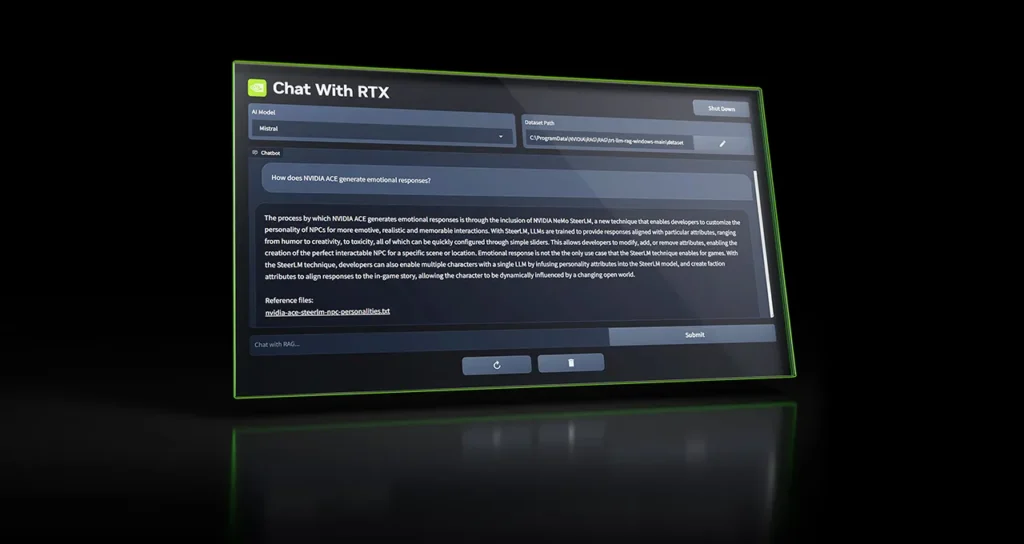

Called Chat with RTX, the tool lets users customize a GenAI model along the lines of OpenAI's ChatGPT by connecting it to documents, files and notes that it can then query.

"Instead of searching for notes or saved content, users can simply type queries," Nvidia writes in a blog post. "For example, one might ask, 'What was the restaurant my partner recommended to me while he was in Las Vegas?' and Chat with RTX will scan the local files the user points to and provide the response with context.”

Chat with RTX uses AI startup Mistral's open source model by default but supports other text-based models, including Meta's Llama 2. Nvidia warns that downloading all the necessary files will eat up a fair amount of storage: 50 GB to 100 GB, depending on the model selected.

Chat with RTX currently works with .txt, .pdf, .doc, .docx and .xml files. Pointing the app at a folder containing supported files loads those files into the dataset the model draws on. Chat with RTX can also take the URL of a YouTube playlist and load transcripts of the videos in it, letting whichever model is selected query their contents.
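Nvidia's post doesn't spell out the internals, so what follows is only a hypothetical Python sketch of the general idea: filter a folder down to the file types listed above, then rank text passages by how many words they share with the question. The names `SUPPORTED`, `supported_files` and `rank_passages` are invented for illustration and are not Nvidia's code; a real app would also have to extract text from PDF and Word files and would likely use something smarter than word matching.

```python
from __future__ import annotations

import re
from pathlib import Path

# Hypothetical filter built from the formats the article lists; not Nvidia's code.
SUPPORTED = {".txt", ".pdf", ".doc", ".docx", ".xml"}


def supported_files(folder: str) -> list[Path]:
    """Return every file under `folder` (recursively) with a supported extension."""
    return sorted(
        p for p in Path(folder).rglob("*")
        if p.is_file() and p.suffix.lower() in SUPPORTED
    )


def _words(text: str) -> set[str]:
    """Lowercase the text and split it into alphanumeric words, dropping punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def rank_passages(query: str, passages: dict[str, str], top_k: int = 2) -> list[str]:
    """Return the names of the `top_k` passages sharing the most words with `query`.

    Naive keyword overlap, used here only to show "scan the files, pick the
    relevant context"; ties keep the passages' original order.
    """
    q = _words(query)
    ranked = sorted(passages, key=lambda name: len(q & _words(passages[name])), reverse=True)
    return ranked[:top_k]
```

For the restaurant question quoted earlier, a note that mentions the partner, the restaurant and Las Vegas shares far more words with the query than an unrelated note, so it would be ranked first and handed to the model as context.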

There are limitations to keep in mind, though, which Nvidia, to its credit, outlines in a how-to guide.

Image credits: NVIDIA

Chat with RTX doesn't remember context, meaning the app won't take any previous questions into account when answering follow-up questions. For example, if you ask "What is a common bird in North America?" and follow up with "What are its colors?", Chat with RTX won't know you're talking about birds.
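To make that limitation concrete, here is a hypothetical Python sketch (not Nvidia's code) contrasting a stateless prompt, which matches the behavior the guide describes, with one that carries earlier turns forward. The `build_prompt` function and the "User:/Assistant:" layout are assumptions chosen for illustration.

```python
from __future__ import annotations


def build_prompt(question: str, history: list[tuple[str, str]] | None = None) -> str:
    """Build the text a chat model would see for one turn (hypothetical format).

    With history=None only the current question is sent, so a follow-up like
    "What are its colors?" arrives with nothing telling the model what "its"
    refers to. Passing earlier (question, answer) pairs prepends them, which
    is how a chat app can let the model resolve such references.
    """
    lines: list[str] = []
    for past_question, past_answer in history or []:
        lines.append(f"User: {past_question}")
        lines.append(f"Assistant: {past_answer}")
    lines.append(f"User: {question}")
    return "\n".join(lines)
```

In the stateless case the model receives only `User: What are its colors?`; with the bird question passed in `history`, the word "bird" is part of the prompt and the follow-up becomes answerable.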

Nvidia also acknowledges that the relevance of the app's responses can be affected by a range of factors, some easier to control than others, including the wording of the question, the performance of the selected model and the size of the dataset. Asking for facts covered in a couple of documents is likely to yield better results than asking for a summary of a document or set of documents. And response quality will generally improve with larger datasets, as will pointing Chat with RTX at more content about a specific subject, Nvidia says.

So Chat with RTX is more a toy than anything to be used in production. Still, there's something to be said for apps that make it easier to run AI models locally, which is a growing trend.

In a recent report, the World Economic Forum predicted “dramatic” growth in affordable devices that can run offline GenAI models, including PCs, smartphones, Internet of Things devices, and networking equipment. The reasons, the WEF said, are the clear benefits: not only are offline models inherently more private (the data they process never leaves the device they run on), but they also have lower latency and are more cost-effective than cloud-hosted models.

Of course, the democratization of tools for running and training models opens the door to malicious actors: a cursory Google search turns up many listings for models fine-tuned on toxic content from unscrupulous corners of the web. But advocates of apps like Chat with RTX argue that the benefits outweigh the harms. We'll have to wait and see.