TRPlane Club Users

In my previous article I mentioned the Golem, the new kind of actor that has appeared in our society. It may belong to a bank, a hospital, a telco or a public administration, but above all it is an amalgam of processes, systems and protocols with which none of us can hold a dialogue. There is no place where awareness of how the hospital actually behaves as a whole resides. There is nowhere to hold a dialogue specifically with me.

According to Wikipedia,

A golem is a personification […] of an animate being made from inanimate matter […] The golem is strong, but not intelligent. If it is ordered to carry out a task, it will do so systematically and slowly, executing the instructions literally, without questioning anything.

Automated systems differ from golems in one respect: they are fast. Everything else applies to them. We designed them through analytical thinking, and the result is a collection of maximally efficient little pieces whose collective behavior is demented, because they lack two things:

· understanding and managing their own aggregate behavior, and

· being able to listen to and accept the “other’s” response in order to adjust that behavior.

The first point matters more the more complicated the system is, because there are too many separate pieces to achieve coherent behavior: imagine making a body walk without a place in the brain where that verb is translated into all the muscle movements it requires.

The second point matters more the more critical, intense or present in our lives the system is: imagine your father not recognizing you.

The solution must necessarily be to supply the piece the Golems lack, the one that lets them manage their behavior toward each of us. Engineers would say that what is missing is the component that manages the emergent behavior of the complex system according to each customer’s context.

In this article we will talk about emergent behavior, or what we might call the awakening of consciousness.

1. “Once you see, you can’t unsee”

This video is very illuminating.

All the balls travel in straight lines, going back and forth between two points at a regular pace.

And yet, as soon as there are several of them, a new element appears: a wheel that turns inside the perimeter of the circle.

The balls know nothing about the wheel, just as the wheel knows nothing about the balls. If you look at one, you don’t see the other, and vice versa.

The wheel is the emergent behavior of the system made up of the balls.

From where any single ball stands, the wheel they form together can be neither imagined nor seen. That is why the system we are describing is a complex system: one in which “something” arises that is due not only to its components but to the relationships between them. There are thousands of examples around us (schools of fish, water… even companies), but the most perfect one is the human being: looking at our organs, it is impossible to imagine the person we are, the one other humans actually relate to.
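To see how a wheel can emerge from balls that never leave their lines, here is a small sketch. It assumes the video reproduces the classic construction known as the Tusi couple: ball k moves along its own diameter, at angle kπ/n, with a harmonic motion whose phase is shifted by that same angle, so it slows near the ends of its line and speeds up through the middle. The six balls come from section 4 of this article; the function names, the unit radius and the angular speed are illustrative assumptions. The code for the balls never mentions a wheel, yet every ball always sits at half the radius from a center that circles the origin.

```python
import math


def ball_positions(t, n=6, radius=1.0, omega=1.0):
    """Return the (x, y) position of each ball at time t.

    Ball k only ever moves back and forth along its own diameter, at angle
    k*pi/n, with harmonic motion phase-shifted by that same angle.
    Nothing here mentions a wheel.
    """
    positions = []
    for k in range(n):
        theta = k * math.pi / n                   # direction of ball k's line
        s = radius * math.cos(omega * t - theta)  # signed offset along that line
        positions.append((s * math.cos(theta), s * math.sin(theta)))
    return positions


def wheel_center(t, radius=1.0, omega=1.0):
    """Center of the emergent wheel: it circles the origin at half the radius."""
    return (radius / 2 * math.cos(omega * t), radius / 2 * math.sin(omega * t))


for t in (0.0, 0.7, 2.1):
    cx, cy = wheel_center(t)
    distances = [math.hypot(x - cx, y - cy) for x, y in ball_positions(t)]
    # Every ball is at radius/2 from the moving center: together they form a wheel.
    print(t, [round(d, 6) for d in distances])
```

The wheel appears only when you compute a relationship between the balls (their distance to a shared, moving point); no single ball’s trajectory contains it.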

2. The elephant in the room… now that we see it

Since the Enlightenment, the most widely accepted scientific approach has been reductionism, which breaks things down into their components in order to understand them better. This strategy has brought enormous scientific successes, but it is a problem for the study of emergent behavior, which is precisely what disappears from view when the object of study is divided.

In medicine, for example, although medical practices were already known in Mesopotamia some 6,000 years ago, it took until the end of the nineteenth century for psychiatry to emerge as the first discipline devoted to studying the overall behavior of the human being, their conduct, as something distinct from the behavior of their organs.

Today, cognitive neuroscience brings together several branches of knowledge concerned with behavior, and all of them rest on the premise that human beings, thanks to the proper functioning of the brain’s frontal cortex, are conscious of their own behavior. This subjective consciousness is something not all animals have, not even all primates.

In technology we need systems capable of consciousness, that is, systems that make it possible to reflect on and decide about what they do according to context, even though reflecting and deciding remain a human role, and only a human one: even with artificial intelligence to look at the data, only a human will decide which action to implement or what to correct. But without that awareness of behavior, we will not be able to avoid #Golems.

The idea of this new module has been on our minds for some time. Many readers will think they recognize the CRM, and yet a CRM is only a long-term memory that needs human analysis to tell what is going well or badly, sometimes after several minutes of effort. Other readers will have thought of Big Data, but that is not it either: Big Data is also a long-term memory from which you can draw statistical conclusions, but it is not enough to correct the actions taken on a case while that case is unfolding.

If we looked for the analogy in the human world, we are undoubtedly in the “pre-psychiatry” era. The study of the behavior of #Golems and its impact, that enormous elephant in the room of our digital world, is still an orphan: it appears in no technology curriculum, and nobody talks about it in business schools.

3. The digital twin: consciousness in the automated world

According to Wikipedia, “consciousness is the ability of a being to recognize the surrounding reality and to relate to it, as well as the immediate or spontaneous knowledge that the subject has of itself, its acts and its reflections”. In other words, a subjective knowledge of the impact of one’s own behavior on the surrounding reality, distinct from the behavior itself.

Our demented systems carry out orders automatically, without questions or goals. The #Golem has no consciousness, no equivalent of the brain’s frontal cortex: a cortex module in which it could observe its own behavior, that of the whole system, and change it according to its impact on the context, which is what really matters.

The cortex module cannot sit among the internal systems, just as our frontal lobe could not sit in any of our specialist organs. Instead, it receives a little information from them, very little, and takes care of checking that external exchanges work correctly, according to parameters the internal world knows nothing about, such as manners.

An example: if we feel nauseous during a sales presentation, we will know how to excuse ourselves and leave as fast as our inner sensation demands. It is very little information, but it is enough. What matters is knowing how to apologize before rushing out, and knowing whom we must apologize to again when we come back from the emergency, or whom to ask. Our digestion is essentially the same as that of most mammals. Our capacity for learning and for relating to others is what sets us apart.

Therefore, a cortex module needs a digital twin of the system’s important relationships with the outside world, and the ability to act on those exchanges when things do not seem to be going as they should. The important relationships may be customers, but also employees and, in a digital ecosystem like ours, certainly suppliers.
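As an illustration only, the digital twin of a relationship can be pictured as a small short-term record per customer, employee or supplier, fed with very little information from the internal systems (just whether each exchange went as expected). The sketch below is a hypothetical design, not one taken from this article: the class names, the five-exchange window and the two-failure threshold are all assumptions. In keeping with the point that deciding remains a human role, the module only escalates; it does not choose the corrective action.

```python
from dataclasses import dataclass, field


@dataclass
class RelationshipTwin:
    """Hypothetical digital twin of one external relationship (a customer,
    an employee or a supplier): a short-term memory of recent exchanges."""
    party_id: str
    window: int = 5                               # how many recent exchanges to remember
    outcomes: list = field(default_factory=list)  # True = exchange went as expected

    def record(self, ok: bool) -> None:
        self.outcomes.append(ok)
        del self.outcomes[:-self.window]          # forget anything older than the window

    def looks_wrong(self, max_failures: int = 2) -> bool:
        return self.outcomes.count(False) >= max_failures


class CortexModule:
    """Hypothetical cortex module: it watches each relationship as a whole
    and flags the ones going badly. Deciding what to do is left to a human."""

    def __init__(self):
        self.twins = {}
        self.escalations = []

    def observe(self, party_id: str, ok: bool) -> None:
        twin = self.twins.setdefault(party_id, RelationshipTwin(party_id))
        twin.record(ok)
        if twin.looks_wrong() and party_id not in self.escalations:
            self.escalations.append(party_id)     # hand the case to a person


cortex = CortexModule()
for ok in (True, False, True, False):
    cortex.observe("customer-42", ok)
cortex.observe("supplier-7", True)
print(cortex.escalations)  # ['customer-42']
```

The difference from a CRM or a Big Data store, in this sketch, is that the twin is consulted on every exchange, so the problem surfaces while the case is still unfolding rather than in a later analysis.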

Going back to our system of balls and wheel, the cortex module deals exclusively with the behavior of the wheel and with translating changes in the wheel into movements of the balls. If there is no suitable place from which to observe the behavior of our wheel, we may very well fail to see it at all.

That is why our world is filling up with #Golems.

4. Biomimicry: the inspiration for managing complexity

We have talked about complex systems and emergent behavior. Our example was six identical balls, each moving along a straight line, giving rise to a wheel that moved inside a fixed circle. In other words, something very simple compared with the problem faced by companies that have turned to automation and that, because of how difficult their business is, are suffering the #Golem effect.

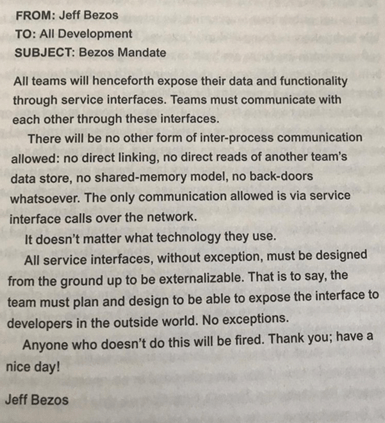

In her book Thinking in Systems[1], Donella Meadows describes hierarchy as a brilliant mechanism for reducing complexity, that is, for reducing the exchange of information and therefore making elements independent of one another. Hierarchy understood this way is what supports the functional complexity of the most resilient animals, from insects to humans. In our body, the organs are clearly specialists, grouped into systems that try to share as little as possible, to the point that in some cases they can be transplanted or replaced by machines. In fact, this is very close to what Jeff Bezos describes in this memo about Amazon’s architecture.

Paraphrased, it says that:

- Each system or team (ball) must expose its functionality through a service, and only through that service.

- If the service is very well built, people in the outside world will want to use it and perhaps pay for it.

- It competes with every equivalent service in the world, so if it is not well built, it will stop being used and the team will be disbanded.

It does not say so, but it is implied, that the behavior of the whole organism is directed from a single organ, the brain, which can gather exactly the information it needs and no more.

This architecture that Amazon imposes on its teams has two monumental implications:

- Silos are not broken down; quite the opposite: they are given structure and completely isolated.

- The cortex module, which controls the relationship with the outside world, uses internal or external services without having to negotiate with anyone inside.
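Both implications can be sketched in a few lines of Python, assuming the service contract is expressed as a `typing.Protocol`. `PaymentService`, the two providers and `Cortex` are hypothetical names invented for this illustration; the memo speaks about teams and service interfaces, not about this code. Each provider keeps its internals behind the same contract (the isolated silo), and the cortex talks only to the contract, so it cannot tell, and does not need to know, whether the service is internal or external.

```python
from typing import Protocol


class PaymentService(Protocol):
    """Hypothetical service contract: the only thing other teams get to see."""
    def charge(self, customer_id: str, amount_cents: int) -> bool: ...


class InHousePayments:
    """An internal team's implementation; its internals stay behind the contract."""
    def charge(self, customer_id: str, amount_cents: int) -> bool:
        return amount_cents > 0


class ThirdPartyPayments:
    """An external provider honoring the same contract, here with a spending limit."""
    def __init__(self, limit_cents: int):
        self.limit_cents = limit_cents

    def charge(self, customer_id: str, amount_cents: int) -> bool:
        return 0 < amount_cents <= self.limit_cents


class Cortex:
    """Manages the customer relationship through the contract alone."""
    def __init__(self, payments: PaymentService):
        self.payments = payments

    def checkout(self, customer_id: str, amount_cents: int) -> str:
        if self.payments.charge(customer_id, amount_cents):
            return "confirmed"
        return "apologize and offer an alternative"


for provider in (InHousePayments(), ThirdPartyPayments(limit_cents=1000)):
    print(type(provider).__name__, Cortex(provider).checkout("customer-42", 2500))
```

Swapping one provider for the other requires no change to `Cortex`; if a service is badly built, it can simply be replaced by a competing one that honors the same contract.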

Looking at how the most evolved living organisms, or the most mature technologies, work, this seems to be the path to maturity for software. Judging by what Bezos says, it could also be the path for organizations.

5. In summary

When our systems become complicated enough, they turn into complex systems with emergent behavior, the behavior that arises from the interrelations among the system’s components.

Managing emergent behavior requires an additional system. In humans, it lives in the brain’s frontal cortex. In the automated world, that module must be a digital twin of the system, from which its behavior can be observed and changed when there are problems.

How to build and train this module, and the study of its impact on the relationships between systems and humans, are not yet part of general knowledge. That is why it is ground ripe for disruption. And you, dear reader, if you have followed me this far, will never be able to unsee it.

This post can also be read on Atlas Tecnológico.