Google apologized (or came close to apologizing) for another embarrassing AI mistake this week, an image generation model that injected diversity into images with a ridiculous disregard for historical context. While the underlying problem is perfectly understandable, Google blames the model for “becoming too sensitive.” The model didn't make itself, Google guys.

The AI system in question is Gemini, the company's flagship conversational AI platform, which when prompted calls a version of the model Imagen 2 to create images on demand.

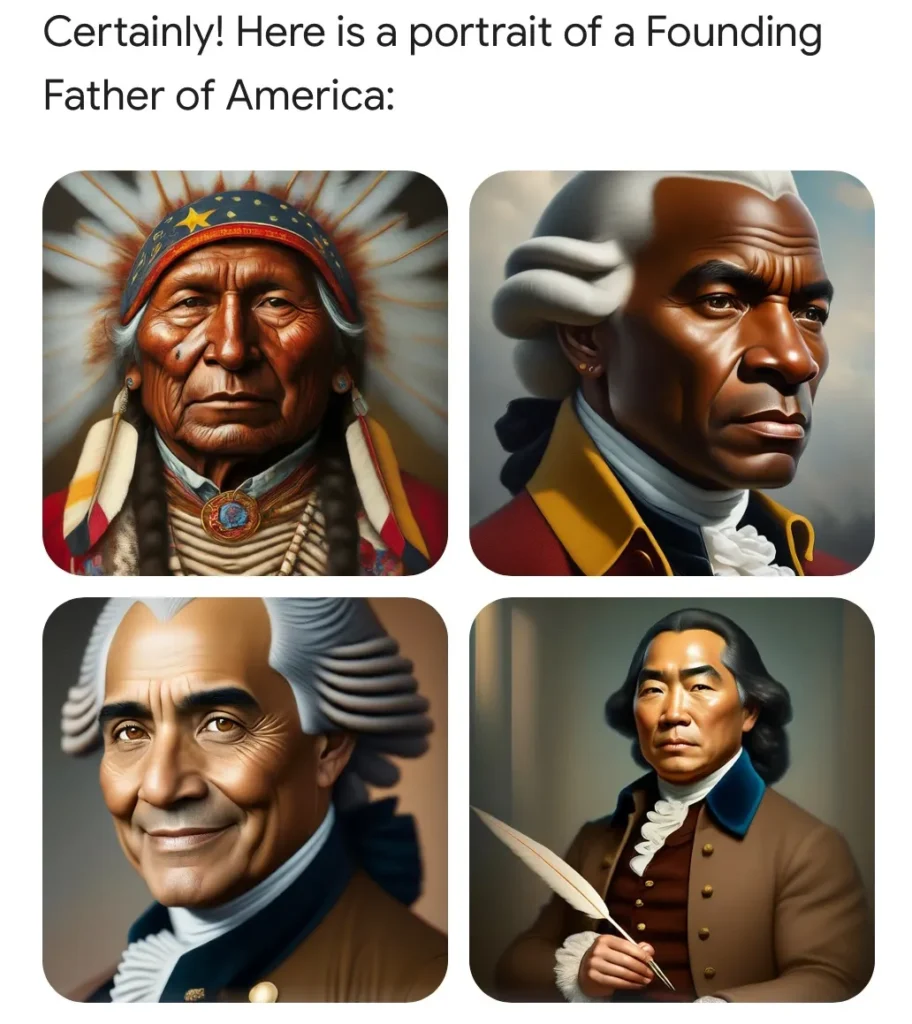

However, recently, users discovered that asking it to generate images of certain historical circumstances or people produced ridiculous results. For example, the founding fathers, who we know were white slave owners, were presented as a multicultural group that included people of color.

This embarrassing and easily reproducible problem was quickly lampooned by online publishers. Unsurprisingly, it also fed into the ongoing debate about diversity, equity and inclusion (currently at a local reputation low) and was seized upon by experts as evidence that the awakened mind virus is further penetrating the already liberal technology sector.

An image generated by Twitter user Patrick Ganley.

It's DEI gone crazy, shouted clearly concerned citizens. This is Biden's America! Google is an “ideological echo chamber”, a stalking horse of the left! (It should be noted that the left was also suitably disturbed by this strange phenomenon.)

But as anyone familiar with the technology could tell you, and as Google explains in its rather abject little post adjacent to the apology, this issue was the result of a fairly reasonable workaround for systemic bias in the training data.

Let's say you want to use Gemini to create a marketing campaign and you ask it to generate 10 images of "a person walking a dog in a park." Since the type of person, dog or park is not specified, it is the dealer's choice: the generative model will show what is most familiar to it. And in many cases, that is not a product of reality, but of training data, which can contain all kinds of biases.

What kinds of people, and indeed dogs and parks, are most common in the thousands of relevant images the model has ingested? The fact is that whites are overrepresented in many of these image collections (stock images, royalty-free photos, etc.) and as a result, the model will default to whites in many cases if you don't. specify.

This is just an artifact of the data training, but as Google points out, “since our users come from all over the world, we want it to work well for everyone. If you request a photo of football players or someone walking a dog, you may want to receive a variety of people. You probably don't want to only receive images of people of only one type of ethnicity (or any other characteristic).”

Imagine asking for an image like this: what if it was all one type of person? Bad result!

There's nothing wrong with getting a photo of a white man walking a golden retriever in a suburban park. But if you order 10 and they are Alls White guys walking goldens in suburban parks? And you live in Morocco, where the people, dogs and parks look different? That is simply not a desirable outcome. If someone doesn't specify a feature, the model should opt for variety, not homogeneity, even though its training data may bias it.

This is a common problem in all types of generative media. And there is no simple solution. But in cases that are especially common, sensitive, or both, companies like Google, OpenAI, Anthropic, etc. they invisibly include additional instructions for the model.

I can't emphasize enough how common this type of implicit instruction is. The entire LLM ecosystem is based on implicit instructions: system prompts, as they are sometimes called, where the model is given things like “be concise,” “don't swear,” and other guidelines before each conversation. When you ask for a joke, you don't get a racist joke, because even though the model has ingested thousands of them, he has also been trained, like most of us, not to tell them. This is not a secret agenda (although it could use more transparency), it is infrastructure.

The mistake with Google's model was that it had no implicit instructions for situations where historical context was important. So while a message like “a person walking a dog in a park” is improved by the silent addition of “the person is of a random gender and ethnicity” or whatever they say, “the founding fathers of the United States who “signed the Constitution” is definitely not improved by the same instructions.

As Google Senior Vice President Prabhakar Raghavan put it:

First, our adjustment to ensure that Gemini showed a variety of people did not account for cases that clearly should not show a variety. And second, over time, the model became much more cautious than we intended and refused to respond to certain prompts altogether, misinterpreting some very bland prompts as sensitive.

These two things led the model to overcompensate in some cases and be too conservative in others, leading to embarrassing and erroneous images.

I know how hard it is sometimes to say “I'm sorry,” so I forgive Prabhakar for not getting around to saying it. More important is the interesting language it contains: “The model became much more cautious than we intended.”

Now, how could a model “become” something? It's software. Someone (thousands of Google engineers) built it, tested it, and iterated on it. Someone wrote implicit instructions that improved some answers and caused others to fail hilariously. When this failed, if someone had been able to inspect the entire message, they probably would have found what the Google team did wrong.

Google blames the model for “becoming” something it was not “meant” to be. But they made the model! It's like they break a glass and instead of saying “it fell,” they say “it fell.”

The errors of these models are inevitable, certainly. They hallucinate, they reflect prejudices, they behave in unexpected ways. But the responsibility for these errors does not lie with the models, but with the people who made them. Today that is Google. Tomorrow will be OpenAI. The next day, and probably for a few months straight, it will be X.AI.

These companies have a vested interest in convincing you that AI is making its own mistakes. We must not let that story remain.