Did a human write that, or ChatGPT? It can be hard to tell, perhaps too hard, thinks its creator, OpenAI, which is why the company is working on a way to "watermark" AI-generated content.

In a lecture at the University of Texas at Austin, computer science professor Scott Aaronson, currently a guest researcher at OpenAI, revealed that OpenAI is developing a tool for "statistically watermarking the outputs of a text [AI system]." Whenever a system, for example ChatGPT, generates text, the tool would embed an "unnoticeable secret signal" indicating where the text came from.

OpenAI engineer Hendrik Kirchner built a working prototype, Aaronson says, and the hope is to build it into future OpenAI-developed systems.

"We want it to be much harder to take an AI output and pass it off as if it came from a human," Aaronson said in his remarks. "This could be helpful for preventing academic plagiarism, obviously, but also, for example, mass generation of propaganda, you know, spamming every blog with seemingly on-topic comments supporting Russia's invasion of Ukraine without even needing a building full of trolls in Moscow, or impersonating someone's writing style in order to incriminate them."

Exploiting randomness

Why the need for a watermark? ChatGPT is a strong example. The chatbot developed by OpenAI has taken the internet by storm, showing an aptitude not only for answering challenging questions, but also for writing poetry, solving programming puzzles, and waxing poetic on a host of philosophical subjects.

While ChatGPT is a lot of fun and genuinely useful, the system raises obvious ethical concerns. Like many of the text-generating systems before it, ChatGPT could be used to write high-quality phishing emails and harmful malware, or to cheat on school assignments. And as a question-answering tool, it's factually inconsistent, a shortcoming that led the programming Q&A site Stack Overflow to ban answers originating from ChatGPT until further notice.

To understand the technical underpinnings of OpenAI's watermarking tool, it's helpful to know why systems like ChatGPT work as well as they do. These systems treat input and output text as strings of "tokens," which can be words but also punctuation marks and parts of words. At their core, the systems are constantly computing a mathematical function called a probability distribution to decide the next token (e.g., word) to output, taking into account all previously output tokens.
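As a rough illustration (not OpenAI's code), a model's raw scores for the candidate next tokens are commonly turned into a probability distribution with the softmax function. The tokens and scores below are invented for the example and stand for one fixed context; a real model recomputes them after every token it outputs.

```python
import math

def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Turn raw per-token scores into probabilities that sum to 1."""
    top = max(scores.values())  # subtract the max before exp() for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Invented scores for candidate tokens following "The cat sat on the".
scores = {"mat": 3.0, "sofa": 2.0, "roof": 1.0, ",": -1.0}
dist = softmax(scores)
for tok, p in sorted(dist.items(), key=lambda kv: -kv[1]):
    print(f"{tok!r}: {p:.3f}")
```

Higher scores become higher probabilities, but every candidate keeps some nonzero chance of being picked.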

In the case of OpenAI-hosted systems like ChatGPT, once the distribution is generated, OpenAI's server does the job of sampling tokens according to that distribution. There's some randomness in this selection; that's why the same text prompt can yield a different response.
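A minimal sketch of that sampling step, assuming the distribution has already been computed. The probabilities are made up, and Python's `random.choices` merely stands in for whatever sampler OpenAI's servers actually use.

```python
import random

def sample_next_token(dist: dict[str, float], rng: random.Random) -> str:
    """Draw one token, with each token's chance proportional to its probability."""
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]

# Hypothetical distribution over next tokens after "The cat sat on the".
dist = {"mat": 0.70, "sofa": 0.20, "roof": 0.09, ",": 0.01}

# An unseeded generator: running this twice can print different tokens,
# which is why the same prompt can get a different response.
rng = random.Random()
print([sample_next_token(dist, rng) for _ in range(5)])
```

Over many draws "mat" comes up roughly 70% of the time, but any single response may take a less likely path.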

OpenAI's watermarking tool acts like a "wrapper" over existing text-generating systems, Aaronson said during the lecture, leveraging a cryptographic function running at the server level to "pseudorandomly" select the next token. In theory, text generated by the system would still look random to you or me, but anyone possessing the "key" to the cryptographic function would be able to uncover a watermark.
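OpenAI hasn't published how its wrapper works, so the following is only one well-known way to make sampling keyed and pseudorandom, under stated assumptions: a hypothetical secret key, HMAC-SHA256 as the keyed function, and the last three tokens as context. For each candidate token it derives a number r between 0 and 1 from the key, then picks the token with the largest r^(1/p), where p is the model's probability for that token. If the r values behaved like truly random draws, each token would still be chosen with probability p, which is why the output can read as ordinary text.

```python
import hashlib
import hmac
import math

def keyed_uniform(key: bytes, context: tuple[str, ...], token: str) -> float:
    """Hypothetical keyed function: map (key, recent tokens, candidate) into (0, 1)."""
    msg = "\x1f".join(context + (token,)).encode("utf-8")
    digest = hmac.new(key, msg, hashlib.sha256).digest()
    n = int.from_bytes(digest[:8], "big") >> 12  # keep 52 bits of the digest
    return (n + 0.5) / 2**52  # strictly between 0 and 1

def watermarked_choice(dist: dict[str, float], key: bytes, context: tuple[str, ...]) -> str:
    """Pick the token maximizing r ** (1 / p), computed as log(r) / p for stability.

    If each r were an independent uniform draw, token t would win with
    probability dist[t], so sampling stays unbiased; but the choice is fully
    determined by the key and context, so the key holder can recompute it.
    """
    candidates = [t for t, p in dist.items() if p > 0]
    return max(candidates, key=lambda t: math.log(keyed_uniform(key, context, t)) / dist[t])

key = b"hypothetical-secret-key"  # assumption: any secret byte string
dist = {"mat": 0.70, "sofa": 0.20, "roof": 0.09, ",": 0.01}
context = ("sat", "on", "the")  # assumption: the last three tokens act as context
print(watermarked_choice(dist, key, context))
```

The design choice here is that randomness is replaced, not removed: across many different contexts the picks still follow the model's probabilities, yet the same key and context always yield the same token.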

"Empirically, a few hundred tokens seem to be enough to get a reasonable signal that yes, this text came from an AI system. In principle, you could even take a long text and isolate which parts probably came from the AI system and which parts probably didn't," Aaronson said. "The tool can do the watermarking using a secret key, and it can check for the watermark using the same key."

Key limitations

Watermarking AI-generated text isn't a new idea. Previous attempts, mostly rule-based, have relied on techniques like synonym substitutions and syntax-specific word changes. But outside of theoretical research published by the German research institute CISPA last March, OpenAI's appears to be one of the first cryptography-based approaches to the problem.

When contacted for comment, Aaronson declined to reveal more about the watermarking prototype, save that he expects to co-author a research paper in the coming months. OpenAI also declined, saying only that watermarking is among several "provenance techniques" it's exploring to detect AI-generated outputs.

Unaffiliated academics and industry experts, however, shared mixed opinions. They note that the tool is server-side, meaning it wouldn't necessarily work with all text-generating systems. And they argue that it would be trivial for adversaries to work around it.

"I think it would be quite easy to get around it by rephrasing, using synonyms, etc.," said Srini Devadas, a professor of computer science at MIT. "This is a bit of a tug of war."

Jack Hessel, a research scientist at the Allen Institute for AI, pointed out that it would be difficult to imperceptibly fingerprint AI-generated text because each token is a discrete choice. Too obvious a fingerprint might result in odd word choices that degrade fluency, while too subtle a fingerprint would leave room for doubt when someone goes looking for it.

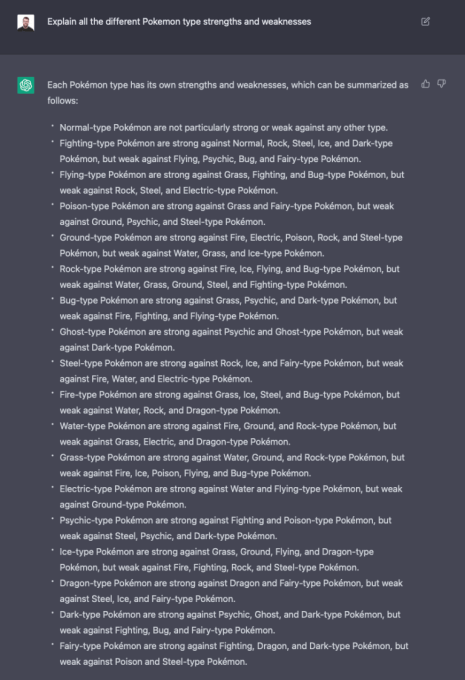

ChatGPT answering a question.

Yoav Shoham, co-founder and co-CEO of AI21 Labs, a rival to OpenAI, doesn't think statistical watermarking is enough to help identify the source of AI-generated text. He calls for a "more comprehensive" approach that includes differential watermarks, in which different parts of text are marked differently, and AI systems that more accurately cite sources of factual text.

This specific watermarking technique also requires placing a lot of trust, and power, in OpenAI, the experts pointed out.

“An ideal fingerprint would not be perceptible by a human reader and would allow highly reliable detection,” Hessel said by email. "Depending on how it's set up, it could be that OpenAI is the only party able to provide that detection with confidence because of how the 'signature' process works."

In his lecture, Aaronson acknowledged that the scheme would only really work in a world where companies like OpenAI are ahead in scaling up state-of-the-art systems, and they all agree to be responsible players. Even if OpenAI were to share the watermarking tool with other providers of text-generating systems, such as Cohere and AI21 Labs, this wouldn't prevent others from choosing not to use it.

"If it becomes a free-for-all, then a lot of the safety measures do become harder, and might even be impossible, at least without government regulation," Aaronson said. "In a world where anyone could build their own text model that was just as good as ChatGPT, for example… what could be done there?"

That's how it has played out in the text-to-image domain. Unlike OpenAI, whose DALL-E 2 image-generating system is only available through an API, Stability AI open-sourced its text-to-image technology, called Stable Diffusion. While DALL-E 2 has a number of API-level filters to prevent problematic images from being generated (plus watermarks on the images it generates), the open source Stable Diffusion does not. Bad actors have used it to create deepfaked porn, among other toxic content.

For his part, Aaronson is optimistic. In the lecture, he expressed the belief that if OpenAI can demonstrate that watermarking works and doesn't affect the quality of the generated text, it has the potential to become an industry standard.

Not everyone agrees. As Devadas points out, the tool needs a key, meaning it can't be completely open source, which could limit its adoption to organizations that agree to partner with OpenAI. (If the key were made public, anyone could deduce the pattern behind the watermarks, defeating their purpose.)

But it may not be so far-fetched. A Quora representative said that the company would be interested in using such a system and that it probably wouldn't be the only one.

"You might worry that this whole business of trying to be safe and responsible when scaling AI… as soon as it seriously hurts the bottom lines of Google, Meta, Alibaba and the other major players, a lot of it will go out the window," Aaronson said. "On the other hand, we've seen over the past 30 years that the big internet companies can agree on certain minimal standards, whether out of fear of getting sued, a desire to be seen as a responsible player, or whatever other reason."
