Artificial Intelligence

As children, we could answer elementary-school math problems simply by filling in the answers.

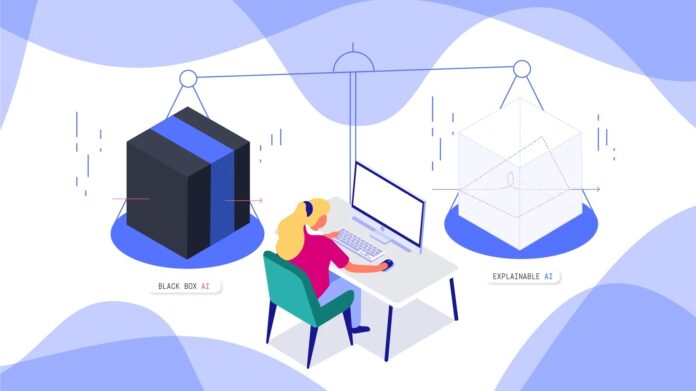

But when we didn't “show our work,” teachers took off points; the right answer wasn't worth much without an explanation. Yet those lofty standards of explainability for long division somehow don't seem to apply to AI systems, even those that make crucial, life-affecting decisions.

The major AI players that fill today's headlines and feed stock-market frenzies (OpenAI, Google, Microsoft) run their platforms on black-box models. A query goes in one side and an answer comes out the other, but we have no idea what data or reasoning the AI used to produce that answer.

Most of these black-box AI platforms are built on a decades-old technology framework called a “neural network.” These AI models are abstract representations of the vast amounts of data on which they are trained; they are not directly connected to the training data. As a result, black-box AIs infer and extrapolate based on what they estimate to be the most probable answer, not on actual data.

Sometimes this complex predictive process spins out of control and the AI “hallucinates.” Black-box AI is inherently untrustworthy because it cannot be held accountable for its actions. If you cannot see why or how the AI makes a prediction, you have no way of knowing whether it used false, compromised, or biased information or algorithms to reach that conclusion.

While neural networks are incredibly powerful and here to stay, another AI framework has been flying under the radar and is gaining prominence: instance-based learning (IBL). It is everything neural networks are not. IBL is AI that users can trust, audit, and explain. IBL traces every decision back to the training data used to reach that conclusion.

IBL can explain every decision because the AI does not build an abstract model of the data; it makes decisions from the data itself. Users can audit AI built on IBL, interrogate it to find out why and how it made its decisions, and then step in to correct errors or bias.

All of this works because IBL stores the training data (“instances”) in memory and, following “nearest neighbor” principles, makes predictions about new instances based on their proximity to existing instances, that is, their distance in the feature space. IBL is data-centric, so individual data points can be compared directly with one another to gain insight into the dataset and the predictions. In other words, IBL “shows its work.”
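The nearest-neighbor idea described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular IBL product is built: the hiring dataset, the feature names, and the `predict` helper are all hypothetical, and feature scaling is omitted for brevity. The point is that the prediction comes back together with the stored instances that produced it.

```python
import math
from collections import Counter

# Hypothetical training instances: (features, label).
# Features are (years_experience, skills_test_score); all values are made up.
INSTANCES = [
    ((1.0, 55.0), "reject"),
    ((2.0, 60.0), "reject"),
    ((6.0, 85.0), "hire"),
    ((7.0, 90.0), "hire"),
    ((6.5, 88.0), "reject"),  # a label an auditor might question
]

def predict(instances, query, k=3):
    """Return (label, neighbors): the majority label among the k nearest
    stored instances, plus those instances so the decision can be traced."""
    ranked = sorted(instances, key=lambda inst: math.dist(inst[0], query))
    neighbors = ranked[:k]
    votes = Counter(label for _, label in neighbors)
    label = votes.most_common(1)[0][0]
    return label, neighbors

# Trace a decision back to the data that drove it.
label, neighbors = predict(INSTANCES, (6.4, 87.0), k=1)
print(label, neighbors)

# Intervene: drop the questionable instance and ask again.
cleaned = [inst for inst in INSTANCES if inst != ((6.5, 88.0), "reject")]
print(predict(cleaned, (6.4, 87.0), k=1)[0])
```

With `k=1` the first call is decided entirely by the questionable instance, which is exactly what an auditor would see in the returned neighbors; removing that instance changes the outcome without any retraining step.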

The potential of such understandable AI is clear. Companies, governments, and any other regulated entity that wants to deploy AI in a trustworthy, explainable, and auditable way could use IBL AI to meet regulatory and compliance standards. IBL AI will also be particularly useful in any application where allegations of bias are common: hiring, college admissions, legal cases, and so on.

Companies are already using IBL. For example, a commercial IBL framework can serve customers such as large financial institutions, detecting anomalies in customer data and generating auditable synthetic data that complies with the EU's General Data Protection Regulation (GDPR).

Of course, IBL is not without its challenges. The main limiting factor for IBL is scalability, a challenge neural networks also faced for 30 years until modern computing technology made them viable. With IBL, every piece of data must be queried, cataloged, and stored in memory, which becomes harder as the dataset grows.
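To make the scaling concern concrete, here is a rough sketch of the most naive approach, assuming a brute-force search in which every query is compared against every stored instance. The function name and the random data are illustrative only; real systems use faster query structures.

```python
import math
import random

def brute_force_nearest(instances, query):
    """Scan every stored instance; return (nearest_instance, distance_evaluations)."""
    evaluations = 0
    best, best_dist = None, math.inf
    for inst in instances:
        d = math.dist(inst, query)
        evaluations += 1
        if d < best_dist:
            best, best_dist = inst, d
    return best, evaluations

rng = random.Random(0)  # fixed seed so runs are repeatable
for n in (1_000, 10_000, 100_000):
    data = [(rng.random(), rng.random()) for _ in range(n)]
    _, evaluations = brute_force_nearest(data, (0.5, 0.5))
    print(n, evaluations)  # evaluations equals n: cost grows linearly with the data
```

Each prediction costs one distance computation per stored instance, and the whole dataset has to stay in memory, which is why naive instance-based methods struggle as data grows.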

However, researchers are building fast query systems based on advances in information theory to speed this process up significantly. This cutting-edge technology has allowed IBL to compete directly with the computational feasibility of neural networks.

Despite these challenges, the potential of IBL is clear. As more and more companies seek safe, explainable, and auditable AI, black-box neural networks will no longer suffice. Any company, whether a small startup or a large enterprise, should start working with IBL models; a few tips for doing so follow.

An agile, open mindset

With IBL, it works best to explore your data for the insights it can yield, rather than assigning it one particular task, such as “predicting the optimal price” of an item. Keep an open mind and let IBL guide what you learn. IBL may tell you that it cannot predict an optimal price very well from a given dataset, but that it can predict the times of day when people make the most purchases, how they contact the company, and which items they are most likely to buy.

IBL is an agile AI framework that calls for collaborative communication between decision makers and data science teams, not the usual “toss a question over the transom and wait for the answer” approach we see in many organizations deploying AI today.

“Less is more” for AI models

In traditional black-box AI, a single model is trained and optimized for a single task, such as classification. In a large company, that can mean thousands of AI models to manage, which is expensive and unwieldy. IBL, by contrast, allows versatile, multitask analysis. For example, a single IBL model can be used for supervised learning, anomaly detection, and synthetic data generation, all while still providing full explainability.
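As a rough illustration of how one set of stored instances can serve all three tasks, consider the sketch below. Everything in it is an assumption made for the example: the transaction data, the function names, the anomaly threshold, and the interpolation scheme for synthetic points. It makes no claim about privacy guarantees or about how commercial IBL frameworks implement these features.

```python
import math
import random

# Hypothetical stored instances: ((amount_usd, hour_of_day), label). Made-up values.
STORE = [
    ((20.0, 9.0), "normal"),
    ((25.0, 10.0), "normal"),
    ((30.0, 14.0), "normal"),
    ((22.0, 11.0), "normal"),
    ((900.0, 3.0), "fraud"),
]

def nearest(store, point, skip=None):
    """Return (distance, instance) for the stored instance closest to point."""
    candidates = [inst for inst in store if inst is not skip]
    best = min(candidates, key=lambda inst: math.dist(inst[0], point))
    return math.dist(best[0], point), best

def classify(store, point):
    """Task 1: supervised prediction via the nearest instance's label."""
    _, inst = nearest(store, point)
    return inst[1], inst

def is_anomaly(store, point, threshold=50.0):
    """Task 2: flag points far from every stored instance (threshold is an assumption)."""
    dist, inst = nearest(store, point)
    return dist > threshold, inst

def synthesize(store, rng):
    """Task 3: create a synthetic point between an instance and its nearest neighbor."""
    base = rng.choice(store)
    _, other = nearest(store, base[0], skip=base)
    t = rng.random()
    point = tuple(a + t * (b - a) for a, b in zip(base[0], other[0]))
    return point, (base, other)

print(classify(STORE, (24.0, 10.0)))
print(is_anomaly(STORE, (400.0, 2.0)))
print(synthesize(STORE, random.Random(0)))
```

Each function returns the stored instance or instances behind its result, so the same store answers all three questions and every answer stays traceable to specific data points.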

This means IBL users can build and maintain fewer models, which makes for a leaner, more adaptable AI toolbox. Adopting IBL still requires programmers and data scientists, but it does not require investing in hundreds of highly specialized AI experts.

Combine your AI toolset

Neural networks are excellent for any application that needs neither explanation nor auditing. But when AI helps companies make major decisions, such as spending millions of dollars on a new product or completing a strategic acquisition, it must be explainable. And even when AI is used for smaller decisions, such as hiring a candidate or promoting someone, explainability is key. No one wants to learn that they missed out on a promotion because of an inexplicable, black-box decision.

Companies will soon face litigation over cases like these. Choose AI frameworks according to the application: opt for neural networks if all you want is fast data ingestion and fast decision making, and use IBL when you need decisions that are trustworthy, explainable, and auditable.

Instance-based learning is not a new technology. Over the past two decades, computer scientists have developed IBL in parallel with neural networks, but IBL has received less public attention. Now IBL is drawing fresh interest amid the current AI arms race. IBL has shown that it can scale while remaining explainable: a welcome alternative to neural network hallucinations that spew false and unverifiable information.

With so many companies blindly adopting neural network-based AI, the coming year will no doubt bring many data breaches and lawsuits over allegations of bias and misinformation.

Once the errors made by black-box AI begin to hit companies' reputations, and their bottom lines, expect slow-and-steady IBL to have its moment of glory. We all learned the importance of “showing our work” in elementary school, and we can certainly demand the same rigor from the AI that decides the course of our lives.